[NEAR] REAL-TIME ANALYTICS IN NEARLY NO TIME: DEVELOPING YOUR STRATEGY USING AWS TOOLS

Data are only as insightful and important as the person (or perhaps machine) analyzing them. In the data-centric climate of business decision-making, having a dynamic, responsive business intelligence solution at one's disposal is paramount to success in the marketplace. Building a fully integrated solution with storage, compute, database, and analytics capabilities can be costly and difficult to manage. An alternative to building the solution yourself is to leverage the services offered by Amazon Web Services (AWS). AWS provides a plethora of tools that enable the ingestion, storage, and analysis of data. Gaining insight from data in near real time equips decision makers with facts almost as quickly as they are generated.

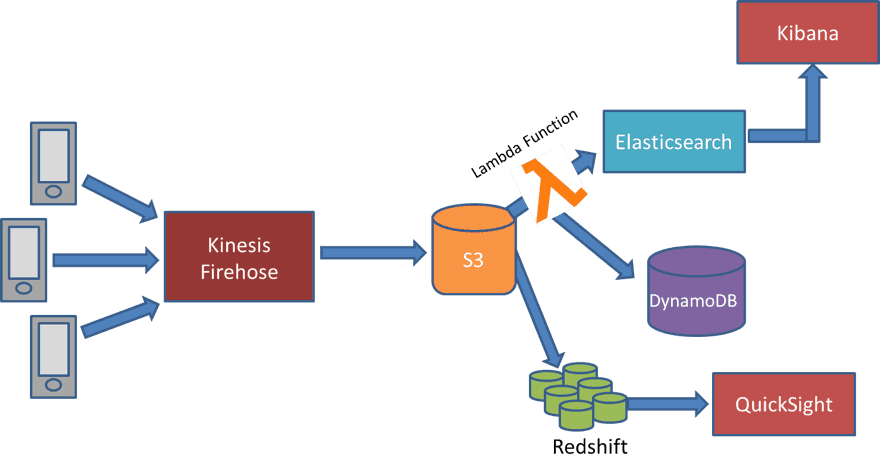

Below is a low-implementation-time strategy for achieving near real-time analytics with tools that are tightly integrated within the AWS ecosystem. The strategy has three main steps: ingest the data, aggregate the data, and analyze the data.

The AWS tools being used for this strategy are:

- Kinesis Firehose – A tool that automatically loads stream data into S3 and Redshift

- Lambda Functions – An event-based compute service that allows you to run code without provisioning the servers on which it runs

- S3 – An object-based storage solution that is highly scalable and secure

- Elasticsearch Service – A managed service that provides search and analytic engines for data stored in clusters

- QuickSight – A tool that performs rapid business intelligence analytics and data visualization

- Redshift – A managed service that provides data warehousing capabilities

- DynamoDB – A managed NoSQL database, used here to store metadata about the files the Lambda function indexes

These tools work together to ingest data – clickstreams, Twitter trends, stock prices, transactional data, etc. – in near real time. The architecture presented below not only offers near real-time analysis, but also stores data in a data warehouse for more in-depth analysis later.

The first step toward near real-time analytics on AWS is to create an Elasticsearch cluster. While this is not the first step in the data analysis process itself, the cluster must be set up before data ingestion begins, because it will house the data for analysis. Amazon Elasticsearch Service comes with Kibana, an open-source data analytics and visualization platform, built in. A Lambda function indexes the content of each S3 file into Elasticsearch Service, where it is later used for data visualization and analytics. It is important to know what data you plan to collect before setting up Elasticsearch, as this will dictate the specifications for the cluster and where to point Kinesis Firehose.
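As a rough sketch of how the data you plan to collect drives the cluster specification, the Python below builds the arguments for boto3's `create_elasticsearch_domain` call. The storage estimate uses AWS's published sizing rule of thumb (source data × (1 + replicas) × 1.45); the domain name, instance type, node count, and Elasticsearch version are illustrative assumptions, and the real API call is left commented out so the sketch runs without AWS credentials.

```python
import json
import math

def min_storage_gib(daily_gib, retention_days, replicas=1):
    """AWS sizing rule of thumb: source data x (1 + replicas) x 1.45 overhead."""
    return daily_gib * retention_days * (1 + replicas) * 1.45

def es_domain_request(domain_name, daily_gib, retention_days, replicas=1,
                      instance_type="t3.small.elasticsearch", instance_count=2):
    """Keyword arguments for boto3's es.create_elasticsearch_domain call.

    Instance type, node count, and version are assumptions for illustration.
    """
    total = min_storage_gib(daily_gib, retention_days, replicas)
    return {
        "DomainName": domain_name,
        "ElasticsearchVersion": "7.10",  # assumed; use a version your account offers
        "ElasticsearchClusterConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
        },
        "EBSOptions": {
            "EBSEnabled": True,
            "VolumeType": "gp2",
            # EBS volume size is set per data node, so spread the total across nodes.
            "VolumeSize": math.ceil(total / instance_count),
        },
    }

if __name__ == "__main__":
    request = es_domain_request("clickstream-analytics", daily_gib=2, retention_days=7)
    print(json.dumps(request, indent=2))
    # With boto3 and AWS credentials available:
    # import boto3
    # boto3.client("es").create_elasticsearch_domain(**request)
```

For 2 GiB of new data per day kept for 7 days with one replica, the estimate comes to roughly 41 GiB, or 21 GiB per node on a two-node cluster.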

1. Ingest the data

The second step in the process is to create an Identity and Access Management (IAM) role for Kinesis Firehose. IAM is a core service for controlling security and access in AWS. IAM policies specify which actions are allowed or denied (the policy's effect) and on which resources, so they can restrict users and services to exactly the actions and resources they need. This is a pivotal piece of securing data in AWS.
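A minimal sketch of the two policy documents such a role typically needs, written in Python: a trust policy that lets the Firehose service assume the role, and a permissions policy that allows writing to a single S3 bucket (plus, optionally, use of one KMS key). The bucket name, role name, and policy name are hypothetical, and the boto3 IAM calls are shown only as comments.

```python
import json

def firehose_trust_policy():
    """Trust policy letting the Kinesis Firehose service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "firehose.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }

def firehose_permissions_policy(bucket_name, kms_key_arn=None):
    """Permissions the role grants: write to one bucket, optionally use one KMS key."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    statements = [{
        "Effect": "Allow",
        "Action": [
            "s3:AbortMultipartUpload",
            "s3:GetBucketLocation",
            "s3:GetObject",
            "s3:ListBucket",
            "s3:ListBucketMultipartUploads",
            "s3:PutObject",
        ],
        # The bucket ARN covers bucket-level actions; "/*" covers the objects.
        "Resource": [bucket_arn, f"{bucket_arn}/*"],
    }]
    if kms_key_arn:
        statements.append({
            "Effect": "Allow",
            "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
            "Resource": [kms_key_arn],
        })
    return {"Version": "2012-10-17", "Statement": statements}

if __name__ == "__main__":
    print(json.dumps(firehose_trust_policy(), indent=2))
    print(json.dumps(firehose_permissions_policy("my-clickstream-bucket"), indent=2))
    # With boto3 (role and policy names are hypothetical):
    # iam = boto3.client("iam")
    # iam.create_role(RoleName="firehose-delivery-role",
    #                 AssumeRolePolicyDocument=json.dumps(firehose_trust_policy()))
    # iam.put_role_policy(RoleName="firehose-delivery-role",
    #                     PolicyName="firehose-s3-write",
    #                     PolicyDocument=json.dumps(
    #                         firehose_permissions_policy("my-clickstream-bucket")))
```

Scoping the resources to one bucket (and one key) follows the least-privilege idea behind IAM policies: the role can do its job and nothing more.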

Kinesis Firehose ingests the data as it is generated and stores it in S3. Creating an IAM role for Kinesis Firehose allows it to call other AWS services and access their resources on your behalf, such as writing to your S3 bucket; this role must exist before any data is ingested. Kinesis Firehose captures and automatically loads data for near real-time analysis. It can also compress data according to your specifications and encrypt it using AWS Key Management Service (KMS).
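The following sketch assumes a Python producer and shows how a delivery stream with GZIP compression and optional KMS encryption might be described for boto3's `create_delivery_stream`, along with a helper that formats each event for `put_record`. The stream name, key prefix, and buffering values are assumptions chosen for illustration.

```python
import json

def delivery_stream_request(stream_name, role_arn, bucket_arn, kms_key_arn=None):
    """Keyword arguments for boto3's firehose.create_delivery_stream call."""
    if kms_key_arn:
        encryption = {"KMSEncryptionConfig": {"AWSKMSKeyARN": kms_key_arn}}
    else:
        encryption = {"NoEncryptionConfig": "NoEncryption"}
    return {
        "DeliveryStreamName": stream_name,
        "DeliveryStreamType": "DirectPut",  # producers call put_record directly
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": bucket_arn,
            "Prefix": "raw/",  # hypothetical key prefix
            # Flush to S3 after 5 MB or 60 seconds, whichever comes first.
            "BufferingHints": {"SizeInMBs": 5, "IntervalInSeconds": 60},
            "CompressionFormat": "GZIP",
            "EncryptionConfiguration": encryption,
        },
    }

def encode_record(event):
    """Serialize one event as a newline-terminated JSON line.

    Firehose concatenates records without adding a delimiter, so the newline
    is what lets downstream readers split an S3 object back into events.
    """
    line = json.dumps(event, separators=(",", ":")) + "\n"
    return {"Data": line.encode("utf-8")}

# Usage with boto3 (names and ARNs are hypothetical):
# firehose = boto3.client("firehose")
# firehose.create_delivery_stream(**delivery_stream_request(
#     "clickstream", role_arn, "arn:aws:s3:::my-clickstream-bucket", kms_key_arn))
# firehose.put_record(DeliveryStreamName="clickstream",
#                     Record=encode_record({"user": "u1", "page": "/home"}))
```

Smaller buffering values shorten the delay before data lands in S3 (and therefore in Elasticsearch), at the cost of more, smaller files.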

2. Aggregate the data

The next step in achieving near real-time data analytics is to create a Lambda function, which forms the aggregation piece of the strategy. Lambda is an AWS service that lets you run code without provisioning a server and charges only for the compute time you consume. Lambda runs your code automatically when a specified event occurs; in this case, the function runs each time a new file is created in S3. The function reads the S3 file, parses its content, and indexes it into Elasticsearch.
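Here is a minimal sketch of such a function in Python, assuming the S3 objects contain newline-delimited JSON (one event per line) and an Elasticsearch 7.x-style index without mapping types. It parses the S3 event notification, decompresses GZIP objects, and builds a request body for the Elasticsearch `_bulk` API. The index name and document ID scheme are hypothetical, the signed HTTP request to the domain endpoint is omitted, and the `read_object` parameter exists only so the logic can be exercised without AWS.

```python
import gzip
import json
from urllib.parse import unquote_plus

INDEX_NAME = "clickstream"  # hypothetical index name

def s3_objects_from_event(event):
    """Yield (bucket, key) pairs from an S3 ObjectCreated notification event."""
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Keys arrive URL-encoded in the event (a space becomes '+').
        yield s3["bucket"]["name"], unquote_plus(s3["object"]["key"])

def parse_lines(raw):
    """Turn the bytes of one S3 object into a list of JSON documents."""
    if raw[:2] == b"\x1f\x8b":  # gzip magic bytes: Firehose compression was enabled
        raw = gzip.decompress(raw)
    text = raw.decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line.strip()]

def bulk_body(docs, key, index=INDEX_NAME):
    """Build a newline-delimited body for the Elasticsearch _bulk API."""
    lines = []
    for n, doc in enumerate(docs):
        # Deterministic IDs (object key + line number) mean a retried
        # invocation overwrites documents instead of duplicating them.
        lines.append(json.dumps({"index": {"_index": index, "_id": f"{key}:{n}"}}))
        lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"  # _bulk requires a trailing newline

def handler(event, context, read_object=None):
    """Lambda entry point; read_object can be swapped out for local testing."""
    if read_object is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        s3 = boto3.client("s3")

        def read_object(bucket, key):
            return s3.get_object(Bucket=bucket, Key=key)["Body"].read()

    bodies = []
    for bucket, key in s3_objects_from_event(event):
        docs = parse_lines(read_object(bucket, key))
        if docs:
            bodies.append(bulk_body(docs, key))
    # Each body would then be POSTed to https://<domain-endpoint>/_bulk with
    # Content-Type application/x-ndjson and SigV4 request signing (omitted).
    return bodies
```

Using the bulk API sends all events from one S3 file in a single request rather than one request per document, which keeps the Lambda invocation short.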

Data is also loaded from S3 into Redshift, a data warehouse service. The Lambda function that indexed the S3 file can also write the file's metadata to DynamoDB. You can use AWS QuickSight to analyze and visualize the data in Redshift, and the data can also be queried long after collection using any of several SQL clients. To load data from S3 into Redshift in parallel, you can build a pipeline with multiple Kinesis streams or Firehose delivery streams. Because these queries may happen much later, Redshift serves as a mechanism for deeper analysis that does not need to be near real time.
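As an illustration, the sketch below generates a Redshift `COPY` statement for the files Firehose wrote to S3, plus a DynamoDB item recording metadata about one indexed file. Note that a single `COPY` from a key prefix already loads the matching files in parallel across the cluster's slices. The table names, the `object_key` partition key, and the metadata fields are assumptions, not part of the original architecture.

```python
def redshift_copy_sql(table, s3_prefix, iam_role_arn, gzip_compressed=True):
    """Build a Redshift COPY statement that loads JSON files under an S3 prefix.

    Inputs are assumed to be trusted configuration values, not user input.
    """
    for value in (table, s3_prefix, iam_role_arn):
        if "'" in value or ";" in value:
            raise ValueError(f"unexpected character in {value!r}")
    parts = [
        f"COPY {table}",
        f"FROM '{s3_prefix}'",  # every object under this prefix is loaded
        f"IAM_ROLE '{iam_role_arn}'",
        "FORMAT AS JSON 'auto'",  # map JSON keys to columns of the same name
    ]
    if gzip_compressed:
        parts.append("GZIP")  # matches Firehose's GZIP compression setting
    return "\n".join(parts) + ";"

def metadata_item(bucket, key, size_bytes, doc_count, indexed_at):
    """DynamoDB item (low-level attribute-value format) describing one indexed file."""
    return {
        "object_key": {"S": f"s3://{bucket}/{key}"},  # hypothetical partition key
        "size_bytes": {"N": str(size_bytes)},
        "doc_count": {"N": str(doc_count)},
        "indexed_at": {"S": indexed_at},
    }

# Usage (table names, keys, and ARNs are hypothetical):
# cursor.execute(redshift_copy_sql("clicks", "s3://my-clickstream-bucket/raw/", role_arn))
# boto3.client("dynamodb").put_item(
#     TableName="ingest-metadata",
#     Item=metadata_item("my-clickstream-bucket", key, 2048, 2, "2024-01-01T00:00:00Z"))
```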

3. Analyze the data

The final step is to analyze the data. Kibana is one tool that comes integrated with Elasticsearch to enable data analysis. The other tool is AWS QuickSight, a new product from Amazon that automatically discovers data stored in AWS services such as S3 and Redshift. QuickSight not only recognizes the source and type of the data it is given, but can also build relationships within the data to help visualize your data story. Given data from the Redshift environment, QuickSight recommends data visualization techniques and suggests variables to group by and measure against. All of this happens within 60 seconds of starting a QuickSight analysis.

While Kibana is more involved to use than QuickSight, it offers more customizable and in-depth analysis, and it can supplement business intelligence discovered using AWS QuickSight. Kibana works with data in JSON format, the same format in which Elasticsearch stores documents. Because Kibana comes pre-installed with Amazon Elasticsearch Service, all you have to do is define an index pattern in Kibana that matches the index in Elasticsearch; a single pattern can also match multiple indices. Specific steps for defining index patterns can be found on the Kibana product page.
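To make the index-pattern idea concrete, this Python sketch shows which index names a wildcard pattern such as `clickstream-*` would cover (using `fnmatch` as an approximation of Kibana's `*` wildcard) and assembles a request for Kibana's saved objects API. The daily index naming scheme and the `timestamp` time field are hypothetical, and the `/_plugin/kibana` path prefix assumes Kibana as hosted by Amazon Elasticsearch Service.

```python
import fnmatch
import json

def matching_indices(pattern, indices):
    """Return the indices a Kibana-style pattern would cover.

    Approximation: treats '*' as "any run of characters", which is how
    Kibana index patterns are typically written.
    """
    return sorted(name for name in indices if fnmatch.fnmatchcase(name, pattern))

def index_pattern_request(pattern, time_field="timestamp"):
    """Path, headers, and body for creating an index pattern via Kibana's
    saved objects API (path prefix assumes Amazon Elasticsearch Service)."""
    path = "/_plugin/kibana/api/saved_objects/index-pattern"
    headers = {"kbn-xsrf": "true", "Content-Type": "application/json"}
    body = json.dumps({"attributes": {"title": pattern, "timeFieldName": time_field}})
    return path, headers, body

if __name__ == "__main__":
    daily = ["clickstream-2024.01.01", "clickstream-2024.01.02", "orders-2024.01.01"]
    print(matching_indices("clickstream-*", daily))
    # POST `body` to https://<domain-endpoint> + path with these headers;
    # the request must also be authorized (for example, SigV4-signed).
    print(index_pattern_request("clickstream-*"))
```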

Data by themselves are troves of undiscovered information and insight, but they are useless without a method to extract and analyze them. Using a strategy such as the one presented above will enable a business to quickly capitalize on the data it is collecting.

If you're interested in learning more about how to capture real-time analytics with AWS tools, or about any other AHEAD products and services, schedule a time to meet with us in our Lab and Briefing Center.