A STEP-BY-STEP GUIDE TO BUILDING AND DEPLOYING GOOGLE CLOUD FUNCTIONS

Why Use Google Cloud Functions?

Google Cloud Functions is a Function as a Service (FaaS) offering that lets engineers and developers run code without managing servers. Cloud Functions scales up and down as needed and integrates with Google Cloud’s operations suite (such as Cloud Logging) out of the box, so monitoring, logging, and debugging are built in. Functions are useful when a task or series of tasks needs to happen in response to an event. Cloud Functions can be triggered by several event sources, such as HTTP requests, Cloud Storage, Cloud Pub/Sub, Cloud Firestore, Firebase, and Google Cloud Logging events. This is especially useful when you want to focus on writing code rather than on the underlying infrastructure.

You can write functions in the Google Cloud Platform console or write them locally and deploy them using Google Cloud tooling on your local machine. Note that Cloud Functions has a few limitations, such as a nine-minute execution limit. Cloud Functions is a good choice for short-lived requests that do one specific task in response to an event.

In this step-by-step guide, we’ll walk through an example scenario that uses Google Cloud Functions, Google Cloud Storage, the Google Cloud Vision API, and Google BigQuery. The purpose of the function is to pass an image to the Vision API and obtain information about it for later analysis. We’ll set up a cloud function in Python that listens for new upload events on a specific Google Cloud Storage bucket. The script will then pass the uploaded image to the Google Cloud Vision API, capture the results, and append them to a table in BigQuery for further analysis. This example uses sample images but can be modified to read documents containing text.

Google Cloud Platform Project Setup

To begin, you will need a Google Cloud Platform project with a few APIs enabled. If you are not familiar with setting up a Google Cloud Platform project, consult Google’s documentation on creating a project before continuing. In this tutorial, we will use gcloud to deploy our function and Python to write the script. You could also use Node.js, Go, Java, .NET, or Ruby if you prefer. Be sure your environment is set up to develop Python scripts with the Google Cloud SDK.

Enable APIs

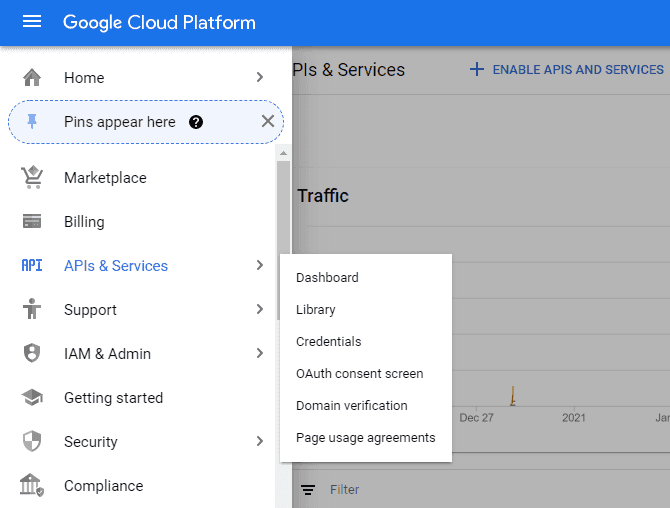

Once your project is set up, you’ll need to enable a few APIs, specifically the Cloud Functions API, the Cloud Vision API, and the BigQuery API. To enable those APIs within your project:

- Select the hamburger menu from the upper left-hand corner of the Google Cloud Platform console.

- Navigate to APIs & Services.

- Select Library. (image 1)

- On the Library page, search for each API mentioned above and enable it with the blue Enable button. You will see an API Enabled label after it has been enabled successfully. (image 2)

- Ensure that the Cloud Functions API, the Cloud Vision API, and the BigQuery API are all enabled.

Image 2:

Set Up Cloud Storage

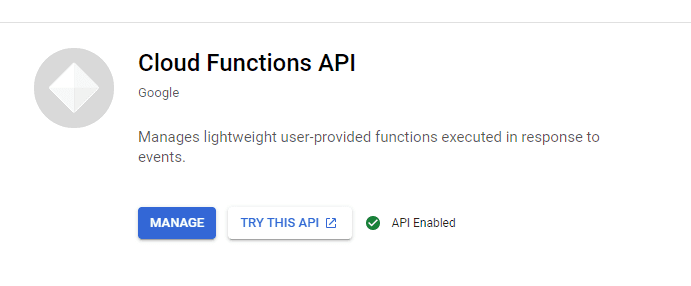

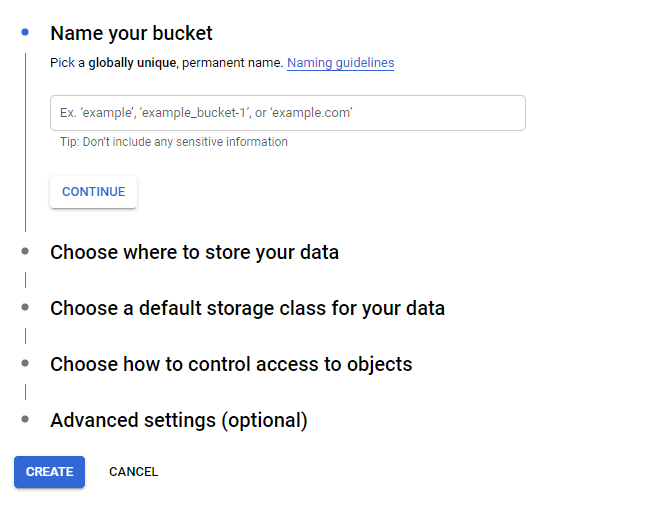

Once your Cloud Platform project is ready, create a Google Cloud Storage bucket. If you are not familiar with Cloud Storage buckets, they are uniquely named areas that provide fine-grained access to objects. They can be used for data storage, landing zones for user uploads, or archives for data that needs to be kept for extended periods. You can find more information in the Google Cloud Storage documentation. To create a bucket:

- Select the hamburger menu from the upper left-hand corner of the Google Cloud Platform console.

- Navigate to Cloud Storage and select Browser. (image 3)

- Select the blue CREATE BUCKET link at the top.

- Follow the creation wizard, keeping note of the bucket name you select. (image 4)

Image 4:

Create a BigQuery Table

Next, you’ll need to create a BigQuery table to contain the image metadata extracted by the Cloud Vision API. BigQuery is Google’s data warehouse designed for analyzing petabytes of data using SQL and is often used for historical analysis, time series data, and other scenarios where SQL-like data can be retained for a long time with fast retrieval. Our table won’t quite hold petabytes of data, but you’ll see shortly how easy it is to write to a table using Cloud Functions. Our BigQuery table could be connected to a data visualization tool (such as Google’s Data Studio) for further analysis. In this example, we will keep it simple by capturing the filename, URI, and generated labels and landmarks, as well as the confidence that Cloud Vision has in the output.

- Ensure you have a project selected in the GCP Console.

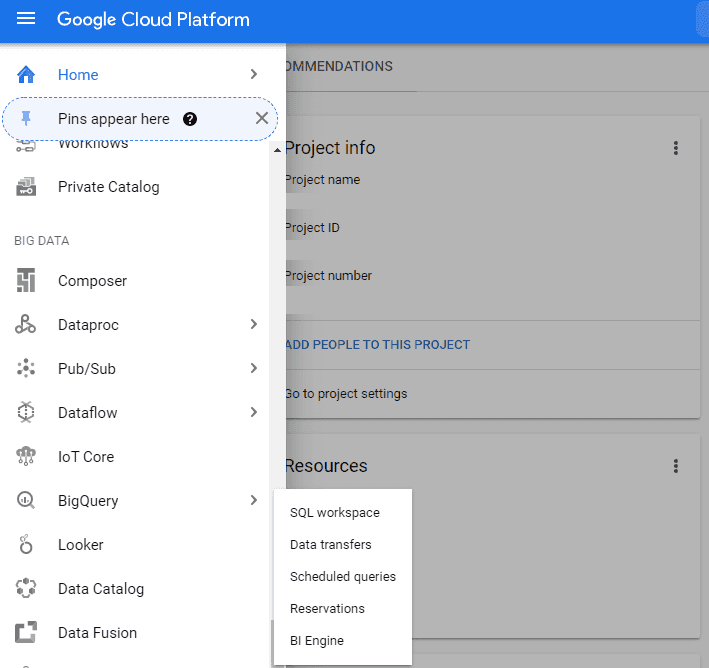

- Select the hamburger menu from the upper left-hand corner of the Google Cloud Platform console.

- Select BigQuery. (image 5)

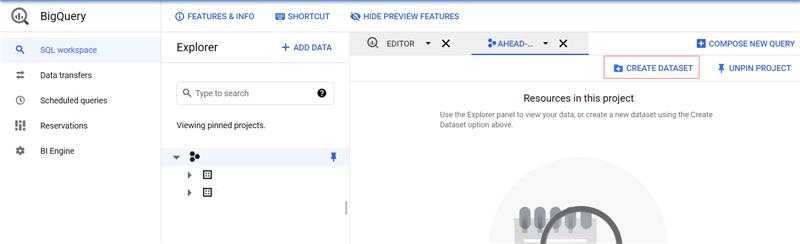

- Select CREATE DATASET from the left-hand side. (image 6)

- Give your dataset a name and leave all other values at their defaults.

- Press the blue Create Dataset button when you are finished. (image 6)

Image 6:

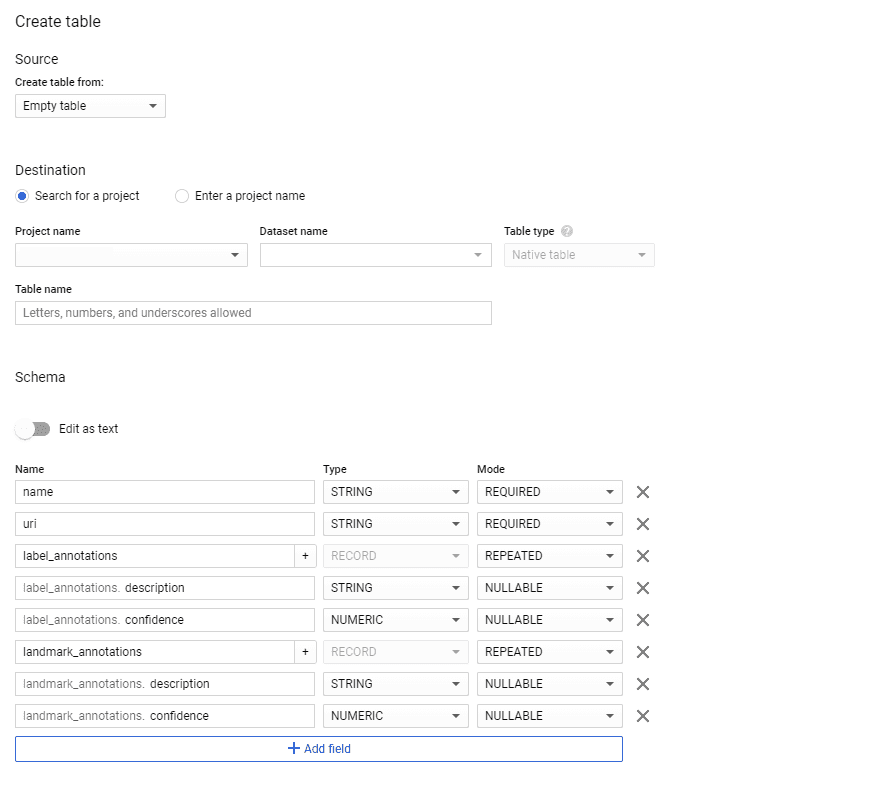

It is now time to create a table and set up a schema. The schema defines what type of data we will be placing into our table. In this case, we will have two STRING fields and two REPEATED fields to hold our image metadata.

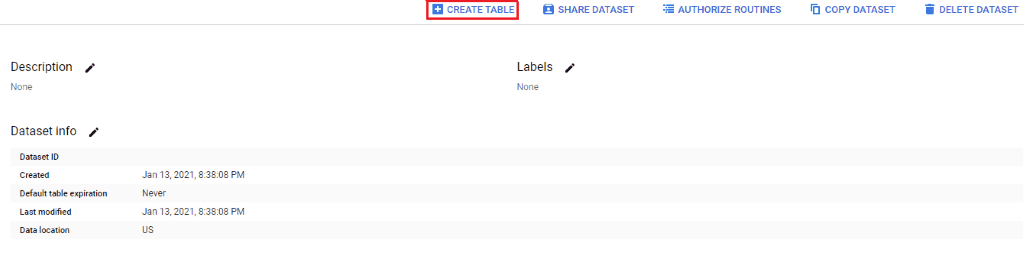

- From the Dataset page, press the blue CREATE TABLE button. (image 7)

- Give the table a name, and make sure that the Project Name and Dataset Name match your current project and dataset.

- Leave the remaining values at their default and define the schema as in image 8. Note: When adding properties to a repeated field, you will need to press the (+) button to add nested fields.

- Write down the dataset name and the table name, as we will need them for our script later.
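As a rough illustration of two STRING fields plus two repeated fields with nested properties, the sketch below writes such a schema in the JSON field format BigQuery uses (name, type, mode, fields). The column names (filename, uri, labels, landmarks, description, score) and the FLOAT type for the score are assumptions made for this example; the schema shown in image 8 is the one to follow.

```python
import json


def annotation_field(name):
    """Describe a REPEATED RECORD column holding description/score pairs.

    The nested field names are hypothetical, chosen for this sketch.
    """
    return {
        "name": name,
        "type": "RECORD",
        "mode": "REPEATED",
        "fields": [
            {"name": "description", "type": "STRING", "mode": "NULLABLE"},
            {"name": "score", "type": "FLOAT", "mode": "NULLABLE"},
        ],
    }


# Two plain STRING columns and two repeated columns with nested fields.
SCHEMA = [
    {"name": "filename", "type": "STRING", "mode": "NULLABLE"},
    {"name": "uri", "type": "STRING", "mode": "NULLABLE"},
    annotation_field("labels"),
    annotation_field("landmarks"),
]

if __name__ == "__main__":
    print(json.dumps(SCHEMA, indent=2))
```

Each repeated column can hold zero or more description/score pairs per image, which is why the nested fields have to be added with the (+) button in the console.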

Image 8:

Generate a Service Account Key

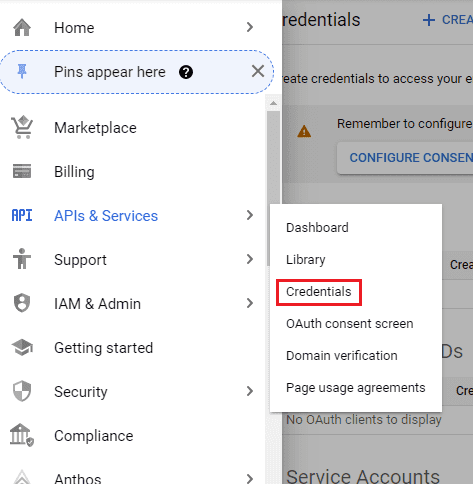

If you wish to develop locally prior to deploying the Cloud Function, we recommend you generate a service account key.

- Select the hamburger menu from the upper left-hand corner of the Google Cloud Platform console.

- Select APIs & Services.

- Select Credentials. (image 9)

- Press the blue CREATE CREDENTIALS button and select Service account.

- Give the new service account a name and description then press Create.

- Set the Owner role on the service account and leave the other fields blank.

This completes setup of our Google Cloud Platform environment, and we are now ready to begin scripting!

Develop the Script

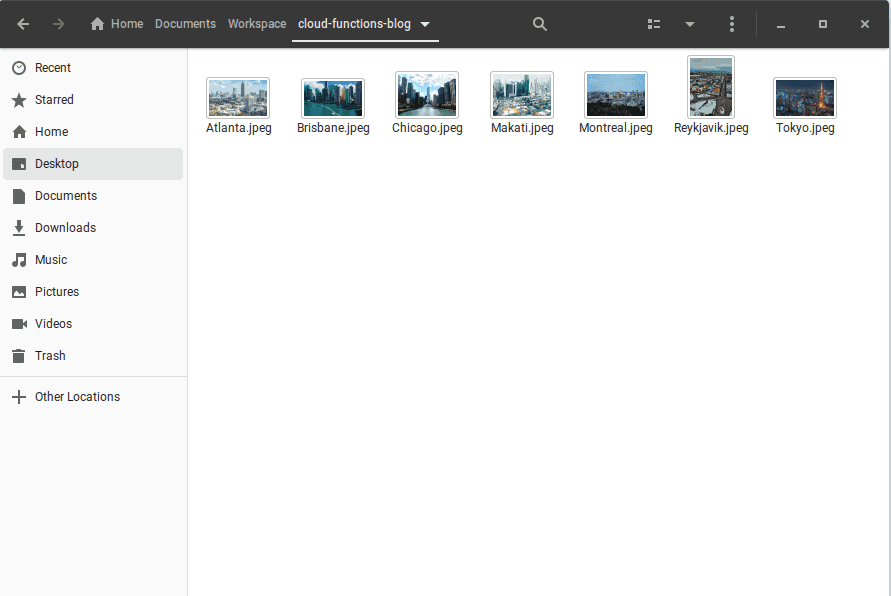

As discussed previously, this script will listen for new uploads to a Cloud Storage bucket and act on the newly uploaded item. In our example, we will be using photos of world cities, but you could use other images (such as a scanned invoice) if you were looking to parse other types of data. We will start by creating a new directory on our local machine.

- Create a new folder.

- Add some images for testing. We used photos of cities around the world. (image 10)

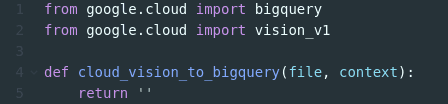

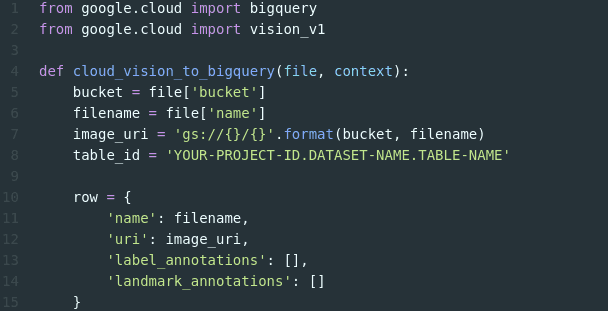

Next, create a new file in the same directory called main.py. This is the source code for the function you will be uploading to Google Cloud Functions. For now, go ahead and insert the following code:

We import the Google Cloud BigQuery and Google Cloud Vision libraries, which contain functions and properties we can use to interact with those APIs.

In this example, we are responding to a Cloud Storage upload event, so we pass two parameters to the function, which are a file variable and a context variable. The file variable holds a reference to the object that was uploaded, while the context variable can provide us information about the context in which the object was uploaded. For this scenario, we will be focusing on the file variable.
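To make the two parameters more concrete, here is a minimal sketch of what a Cloud Storage-triggered function receives. It assumes the background-function style described above, where the file argument is a dictionary with bucket and name keys and the context argument exposes attributes such as event_id and event_type. The function name describe_event and the sample values are hypothetical stand-ins for local experimentation.

```python
from types import SimpleNamespace

# In main.py, the client libraries would be imported at the top of the file:
#   from google.cloud import bigquery, vision


def describe_event(file, context):
    """Summarize an upload event from the two arguments the function receives."""
    return "{} on gs://{}/{} (event {})".format(
        context.event_type, file["bucket"], file["name"], context.event_id
    )


# Hypothetical stand-ins that mimic the shape of a real upload event.
sample_file = {"bucket": "my-example-bucket", "name": "paris.jpg"}
sample_context = SimpleNamespace(
    event_id="1234567890",
    event_type="google.storage.object.finalize",
)

print(describe_event(sample_file, sample_context))
```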

Now we will begin pulling information from the file variable and set up some other variables we’ll need later on. Modify your script to look like this:

The bucket and filename variables hold the bucket and file names as strings, and the image_uri variable holds the Google Cloud Storage URI that we will pass to the Vision API shortly. If you are not familiar with the format function, it substitutes its arguments for the braces it finds within a given string. Do not modify the image_uri variable for this example.

On line 8, you will need to modify the table_id variable to use your Google Cloud Platform project name, your dataset name, and your table name. Refer to the dataset name and table name you wrote down earlier. If you are not sure where to get those, look in the BigQuery section in the Google Cloud Platform console for your project. Finally, the row variable holds information about the image, such as labels and landmarks, that will ultimately be passed to BigQuery.
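The variables described above could be set up along these lines. This is a sketch under stated assumptions rather than the tutorial's exact listing: the helper name prepare_row, the placeholder project, dataset, and table names, and the row keys (filename, uri, labels, landmarks) are all hypothetical and should be replaced with your own values and schema.

```python
def prepare_row(file, project="my-project", dataset="my_dataset", table="my_table"):
    """Derive the image URI, the BigQuery table ID, and an empty row for one upload."""
    bucket = file["bucket"]
    filename = file["name"]

    # format() replaces each pair of braces with the next argument.
    image_uri = "gs://{}/{}".format(bucket, filename)

    # Fully qualified table ID in project.dataset.table form.
    table_id = "{}.{}.{}".format(project, dataset, table)

    # Labels and landmarks start empty and are filled in after the Vision API call.
    row = {"filename": filename, "uri": image_uri, "labels": [], "landmarks": []}
    return image_uri, table_id, row


if __name__ == "__main__":
    print(prepare_row({"bucket": "my-example-bucket", "name": "tokyo.jpg"}))
```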

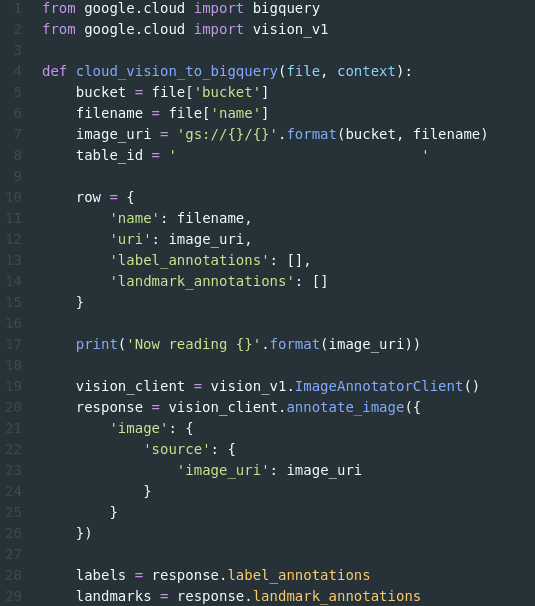

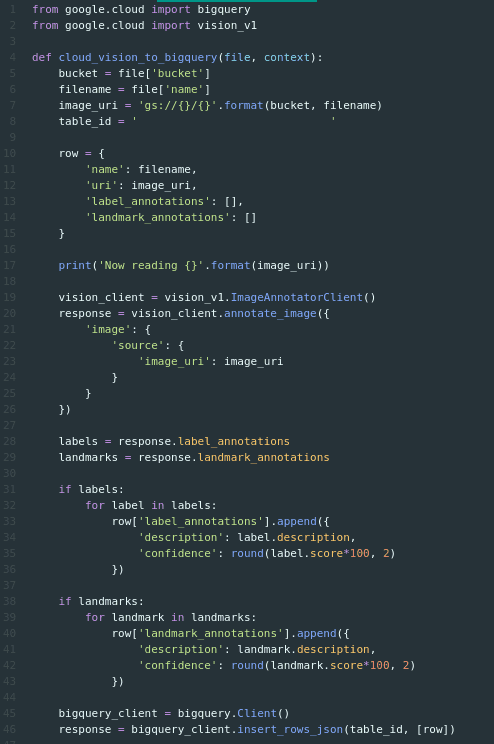

Once those variables are set, it is time to call the Vision API. Modify your script to look like this (I have edited the image to remove the reference to the table_id):

In the new code, we set up a client object, vision_client, that we will use to call the Vision API. We call the annotate_image method on the client and pass it a reference to the Google Cloud Storage object that triggered the upload event. Because this function will be contained in the same project as the storage bucket and the BigQuery table, we don’t have to worry about authentication. In a production system with multiple projects or environments, you would need to take additional steps to authenticate this code. Note further that we are not checking for an error response from the annotate_image call. In a production environment, you would want to wrap this in a try block and gracefully handle or retry any errors as appropriate.
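The error handling mentioned above might look something like the following sketch. It assumes the client is created elsewhere (for example with vision.ImageAnnotatorClient()) and passed in, which keeps the helper easy to exercise with a stand-in object. The helper name annotate and the optional features argument are assumptions for this example; the request dictionary and the response.error.message check follow the Vision client library's annotate_image call.

```python
def annotate(vision_client, image_uri, features=None):
    """Call annotate_image for a Cloud Storage URI and surface any failure."""
    request = {"image": {"source": {"image_uri": image_uri}}}
    if features:
        # The caller supplies feature entries in the form its installed
        # client library version expects; they are passed through untouched.
        request["features"] = features

    try:
        response = vision_client.annotate_image(request)
    except Exception as exc:  # narrow this to the API's exception types in real code
        raise RuntimeError("Vision API call failed for {}".format(image_uri)) from exc

    # The API can also report a per-image error inside a successful response.
    if response.error.message:
        raise RuntimeError(
            "Vision API error for {}: {}".format(image_uri, response.error.message)
        )
    return response
```

Raising an exception lets the platform log the failure; whether to retry is a separate decision that depends on how the function is deployed.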

We are nearly complete with our script. All we need to do now is collect the label and landmark information (along with the Vision API’s confidence level), add it to our row variable, and then append it to our BigQuery table (I have edited the image to remove the reference to the table_id):

The two items to call out here are that we are rounding up the score to be on a scale of 100, and inserting the row as JSON by using the BigQuery API insert_rows_json method. We have now completed our script, and we are ready to deploy it to Cloud Functions for testing.
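A minimal sketch of this last step is shown below. It assumes the row layout used for illustration earlier (labels and landmarks as lists of description/score pairs, which are hypothetical field names) and uses Python's built-in round to put the score on a scale of 100; the tutorial's own rounding may differ. The BigQuery client is passed in (for example, one created with bigquery.Client()), and insert_rows_json returns a list of errors that is empty on success.

```python
def to_percent(score):
    """Convert a 0-1 confidence score to a whole number on a scale of 100."""
    return round(score * 100)


def add_annotations(row, response):
    """Copy label and landmark annotations from a Vision response into the row."""
    for label in response.label_annotations:
        row["labels"].append(
            {"description": label.description, "score": to_percent(label.score)}
        )
    for landmark in response.landmark_annotations:
        row["landmarks"].append(
            {"description": landmark.description, "score": to_percent(landmark.score)}
        )
    return row


def append_row(bq_client, table_id, row):
    """Stream one row into BigQuery and raise if the API reports errors."""
    errors = bq_client.insert_rows_json(table_id, [row])
    if errors:
        raise RuntimeError("BigQuery insert failed: {}".format(errors))
```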

Deploy the Script

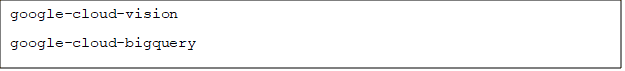

Before we can deploy, let’s make sure Google Cloud Functions can pull down all the dependencies required for our function to run properly. In the same directory as main.py, create a new file called requirements.txt. Within requirements.txt, place the following two lines, one for each client library the script imports: google-cloud-bigquery and google-cloud-vision.

Google Cloud Functions uses the pip package manager to install Python dependencies. When the function is deployed, Google Cloud Functions reads requirements.txt and pulls down the packages listed there. For more information on pip and how Google Cloud Functions uses it, review the Cloud Functions documentation.

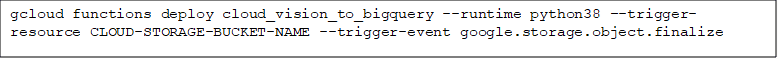

The script is now ready for deployment. Open a terminal window and navigate to the directory containing main.py. From the terminal, enter the following command:
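As a sketch of what such a deploy command could look like, the snippet below assembles a gcloud functions deploy invocation for a Cloud Storage-triggered function and prints it. The function name analyze_image, the python39 runtime, and the bucket name are placeholders assumed for this example; substitute the function name from your main.py, a runtime your project supports, and the bucket you created.

```python
import shlex


def deploy_command(function_name, bucket, runtime="python39"):
    """Build the argument list for deploying a storage-triggered function."""
    return [
        "gcloud", "functions", "deploy", function_name,
        "--runtime", runtime,
        "--trigger-resource", bucket,
        # Fires when an object has been fully written to the bucket.
        "--trigger-event", "google.storage.object.finalize",
    ]


# Print the command so it can be pasted into a terminal opened in the
# directory that contains main.py and requirements.txt.
print(shlex.join(deploy_command("analyze_image", "my-example-bucket")))
```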

The function will begin deploying and should complete within two or three minutes. If the deployment tool detects an error, it will show on the screen. If you receive an error, check your code and remediate any issues you find. If you get dependency errors, make sure you’ve updated requirements.txt as in the instructions above and that the file is in the same directory as main.py.

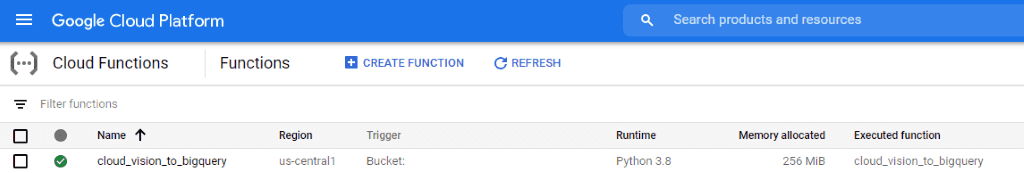

Let’s review the function deployment in the cloud console before we begin to test it.

- Select the hamburger menu from the upper left-hand corner of the Google Cloud Platform console.

- Click Cloud Functions. A list of all functions will appear on the screen. (image 11)

- Verify that the function has a green check mark to the left of its name, and that the bucket name matches the bucket you set up and specified in your deploy command.

Test Your Function

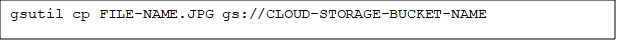

You are now ready to test your function by uploading an image to the bucket. We’ll use the gsutil command to upload one of the images. Within the directory containing your test images, execute this command in the terminal, where FILE-NAME.JPG and CLOUD-STORAGE-BUCKET-NAME are the names of the file you want to upload and the Cloud Storage bucket you created, respectively:
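The upload itself is a gsutil cp from the local file to the bucket. As a small sketch, the helper below (upload_command is a hypothetical name) builds that command from the two placeholders and prints it for use in the terminal.

```python
import shlex


def upload_command(file_name, bucket):
    """Build the gsutil command that copies a local file into a bucket."""
    return ["gsutil", "cp", file_name, "gs://{}".format(bucket)]


# Replace both placeholders with your own file and bucket names.
print(shlex.join(upload_command("FILE-NAME.JPG", "CLOUD-STORAGE-BUCKET-NAME")))
```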

You will see output in the terminal as the file is uploaded and when it completes. If you run into an error, check permissions on your system. Once the file is uploaded, it is time to check our BigQuery table for the information that Cloud Vision was able to provide for labels and landmarks.

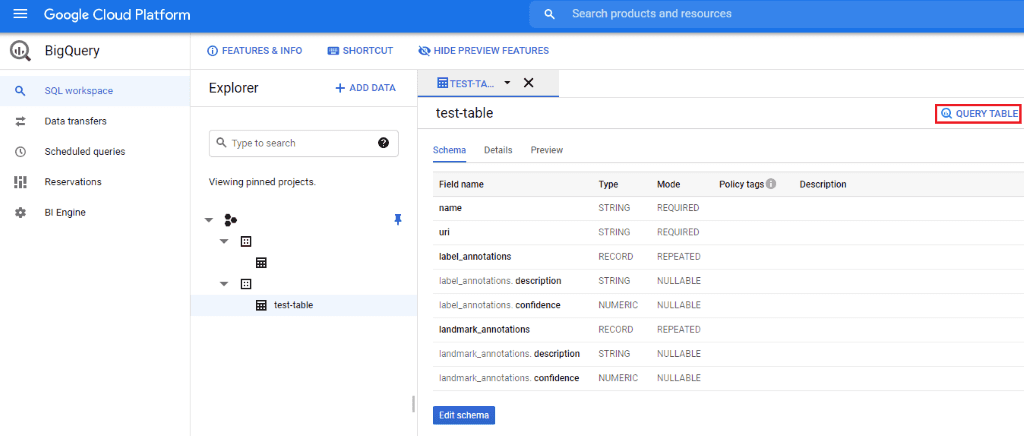

- Select the hamburger menu from the upper left-hand corner of the Google Cloud Platform console.

- Click BigQuery.

- Select SQL workspace.

- Select the BigQuery table you created earlier and press the blue QUERY TABLE button. (image 12)

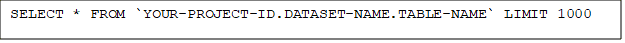

In the screen that appears, fill out the query and then press the blue RUN button. For testing purposes, we can use the following command:
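A simple test query selects everything from the table. The sketch below builds such a statement from placeholder names (the project, dataset, and table values are assumptions for illustration) so the result can be pasted into the query editor; the LIMIT clause is an optional addition that keeps the result set small.

```python
def build_query(project, dataset, table, limit=10):
    """Return a SELECT statement for a fully qualified BigQuery table."""
    # Backticks quote the project.dataset.table path in standard SQL.
    return "SELECT * FROM `{}.{}.{}` LIMIT {}".format(project, dataset, table, int(limit))


print(build_query("my-project", "my_dataset", "my_table"))
```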

Make sure to replace the placeholder values in the query with references to your project name, dataset name, and table name (image 13).

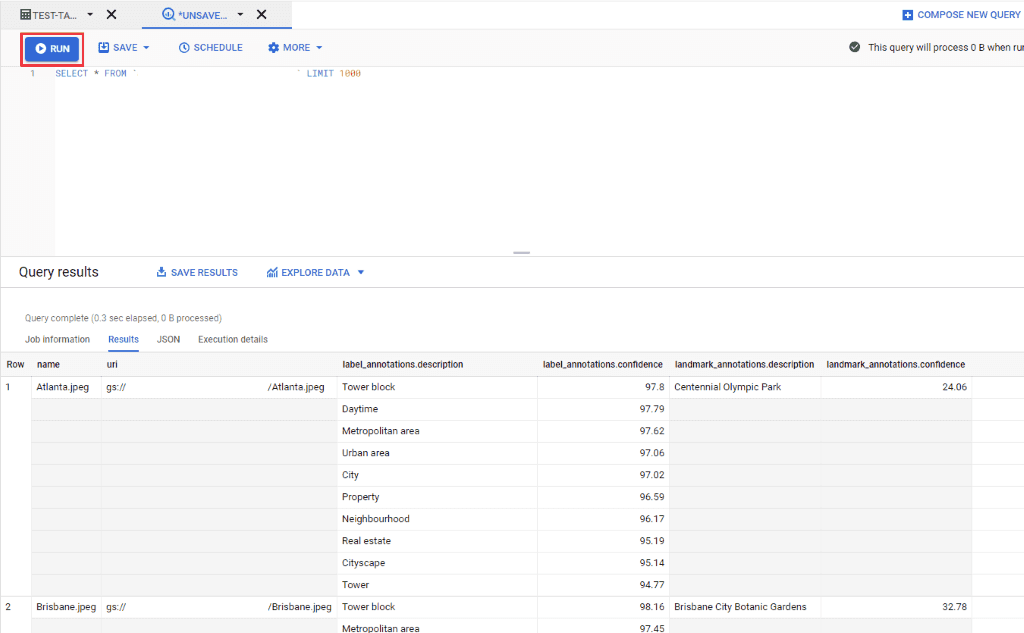

Image 13:

The query will take a few seconds to run. Once it completes, we can see information about all of the images we’ve uploaded to our Cloud Storage bucket, including the label description, the landmark description, and the confidence that the Vision API has in each label and landmark. The function triggers every time a new image is uploaded, so give it a try by uploading four or five images and re-running the query.

Clean Up

Once you are done with the exercise, make sure to clean up. Delete any BigQuery tables you created, along with any images you uploaded to Cloud Storage, and push your function to a git repository. If you created a service account for local development, consider deleting it now.

Suggested Functionality

This exercise focused on standing up a function to capture information about images uploaded to Google Cloud Storage. However, there are many other capabilities you could add to your function, including:

- Edit the code to capture all text in an image.

- Store values from the Vision API into a SQL Database for later analysis or retrieval.

- Attach our BigQuery table to a data visualization tool such as Data Studio.

- Add robust logging and retry logic to our code to make it more production-ready.
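As one possible starting point for the logging and retry suggestion, the sketch below wraps any callable in a simple retry loop with exponential backoff. The helper name with_retries and its default values are assumptions for this example, and real code would likely retry only on errors known to be transient.

```python
import logging
import time


def with_retries(operation, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run operation(), retrying with exponential backoff when it raises."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: let the caller see the last error
            delay = base_delay * 2 ** (attempt - 1)
            logging.warning(
                "Attempt %d failed; retrying in %.1fs", attempt, delay, exc_info=True
            )
            sleep(delay)
```

A call to the Vision API or to BigQuery could then be wrapped as with_retries(lambda: some_call(...)), where some_call stands for whichever function you want to protect.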