As enterprises hand off more decisions to autonomous systems—from deploying code to trading assets—accuracy becomes more than just a performance metric. It’s a measure of trust. When an AI agent makes a wrong call, the cost is not just technical debt, but operational risk.

Major platforms are releasing orchestration software at a breakneck pace, and enterprises are discovering new, more complex use cases every day. AI agents now execute trades, manage production code, route cargo and passengers, and power emerging consumer robotics. In finance alone, agents operate across markets that move trillions daily (about $9.6T in foreign exchange, $200B in crypto, and $600B in U.S. equities). A one-point accuracy lift, applied at that scale, translates into real economic impact.

Autonomous decision-making is spreading into every domain. As agents grow in number and sophistication, the essential question remains: how accurate are your agents, and how much can you trust their decisions?

Understanding Orchestration

Agent orchestration is the system logic that coordinates multiple tools and model calls, deciding which tool to use, in what order, and how to combine results into a coherent solution.

Think of it as the operating system for AI agents. It doesn’t just run a model; it manages a sequence of reasoning, execution, and validation steps that together determine whether an agent reaches the right answer.
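To make "which tool, in what order, and how to combine results" concrete, here is a minimal sketch of the execution half of an orchestrator. It assumes the plan has already been chosen (in a real platform an LLM would pick the tools and their order). The tool names mirror the Var_A × Var_C example task later in this post, but the variable values and the `run_plan` helper are hypothetical, not any platform's API.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical tools with single, non-overlapping purposes.
# The variable values (6 and 7) are illustrative only.
TOOLS: Dict[str, Callable[..., Any]] = {
    "GetVar_A": lambda: 6,
    "GetVar_C": lambda: 7,
    "Multiply": lambda a, c: a * c,
}

# Each step: (name to store the result under, tool to call, names of earlier results to pass in).
Step = Tuple[str, str, List[str]]


def run_plan(plan: List[Step]) -> Any:
    """Run steps in order, wiring earlier results into later tool calls."""
    results: Dict[str, Any] = {}
    for output_name, tool_name, input_names in plan:
        if tool_name not in TOOLS:
            raise KeyError(f"unknown tool: {tool_name}")
        # Validation: a step may only consume results that earlier steps produced.
        missing = [name for name in input_names if name not in results]
        if missing:
            raise ValueError(f"step {output_name!r} depends on missing results {missing}")
        args = [results[name] for name in input_names]
        results[output_name] = TOOLS[tool_name](*args)
    # By convention in this sketch, the final step holds the answer.
    return results[plan[-1][0]]


plan = [
    ("a", "GetVar_A", []),
    ("c", "GetVar_C", []),
    ("answer", "Multiply", ["a", "c"]),
]
print(run_plan(plan))  # 42
```

The dependency check is where ordering mistakes surface: a plan that calls Multiply before fetching its inputs fails loudly instead of returning a wrong number.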

At AHEAD, we focus less on novelty for its own sake and more on reliable problem-solving. While many enterprises experiment with generative AI, few have moved beyond pilots. MIT recently reported that the vast majority of GenAI projects at large companies fail to reach production, a sign that ideation is outpacing execution.

To ground the conversation in evidence, we conducted a benchmark of several leading orchestration platforms, examining how increasing task complexity impacts reliability.

Inside the Benchmark: The ‘Agent Olympics’

The framework is intentionally simple: equip each platform with the same 50 tools and ask it to solve the same 50 problems with known answers. Think of it as an ‘Agent Olympics.’

For example, a task might require an agent to parse a string, apply a numeric transformation, then validate a logical condition across steps. As problems increased in complexity (more steps, stricter dependencies), we observed which platforms sustained accuracy.

Example Tasks

- Parse → Transform: “Extract the integer from Value: 0037 and add 5.” → ParseInteger → Add(5) → 42

- Fetch → Fetch → Combine: “What is Var_A × Var_C?” → GetVar_A → GetVar_C → Multiply(A, C) → answer
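A benchmark of this shape reduces to a list of prompts with known answers and a loop that hands each prompt to the orchestrator under test. The sketch below assumes the orchestrator can be wrapped as a plain `agent(prompt) -> str` callable; `Task`, `run_benchmark`, and the stub agent are hypothetical names, and the expected value for the Var_A × Var_C task assumes illustrative values of 6 and 7, since the real values are not given here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Task:
    prompt: str
    expected: str    # known answer, stored as text
    complexity: int  # assumed proxy: number of tool calls in the reference solution


TASKS: List[Task] = [
    Task("Extract the integer from Value: 0037 and add 5.", "42", complexity=2),
    # Expected value assumes hypothetical Var_A = 6 and Var_C = 7.
    Task("What is Var_A × Var_C?", "42", complexity=3),
]


def run_benchmark(
    agent: Callable[[str], str],
    tasks: List[Task],
    is_correct: Callable[[str, str], bool] = lambda got, want: got.strip() == want,
) -> List[Dict[str, object]]:
    """Give every task to the same agent and record whether each answer was correct."""
    records = []
    for task in tasks:
        answer = agent(task.prompt)
        records.append({
            "prompt": task.prompt,
            "complexity": task.complexity,
            "answer": answer,
            "correct": is_correct(answer, task.expected),
        })
    return records


# Stand-in agent for exercising the harness itself; it always answers "42".
records = run_benchmark(lambda prompt: "42", TASKS)
print(sum(r["correct"] for r in records), "of", len(records))  # 2 of 2
```

The default `is_correct` is a strict string match; passing a semantic comparator instead changes the scoring rule without touching the loop, which is one way to keep every platform on an identical harness.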

Evaluation Principles

- Tool Orthogonality: We designed tools with clear, non-overlapping purposes to test orchestration skill rather than tool-selection confusion. Future studies will introduce overlapping tools to evaluate selection pressure.

- Semantic Accuracy: We scored answers by meaning (e.g., “9” vs. “9.0” both count) to capture the intent of correct reasoning rather than formatting quirks.

- Repeatability: Each orchestrator ran the full evaluation three times to check stability and reproducibility (see the whitepaper for details).

- Orchestrator Implementation: Each platform was implemented in code within a version-controlled harness, using fixed LLM settings (GPT-4o-mini, temperature=0) and uniform timeouts and retries. This keeps comparisons fair and reproducible and ensures that differences reflect orchestration behavior rather than environment drift.
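One way to implement the "9" vs. "9.0" rule is to compare answers numerically when both parse as numbers and fall back to a whitespace- and case-normalized string match otherwise. This is a sketch under that assumption only; the post does not say how the benchmark's semantic matching was actually implemented (it could, for instance, use an LLM judge). The helper names are hypothetical, and `stable_across_runs` simply reports whether each task was scored the same way in all runs.

```python
import math
from typing import List, Optional, Sequence


def _as_number(text: str) -> Optional[float]:
    """Return the text as a finite float if it parses as one, else None."""
    try:
        value = float(text.strip())
    except ValueError:
        return None
    return value if math.isfinite(value) else None


def semantically_equal(got: str, want: str, rel_tol: float = 1e-9) -> bool:
    """Numbers compare by value ("9" == "9.0"); other text compares case- and whitespace-insensitively."""
    got_num, want_num = _as_number(got), _as_number(want)
    if got_num is not None and want_num is not None:
        return math.isclose(got_num, want_num, rel_tol=rel_tol)
    return " ".join(got.lower().split()) == " ".join(want.lower().split())


def stable_across_runs(runs: Sequence[Sequence[bool]]) -> List[bool]:
    """Given correctness flags per run (same task order), flag tasks scored identically in every run."""
    return [len(set(flags)) == 1 for flags in zip(*runs)]


print(semantically_equal("9", "9.0"))  # True
print(stable_across_runs([[True, False], [True, True], [True, False]]))  # [True, False]
```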

What We Found

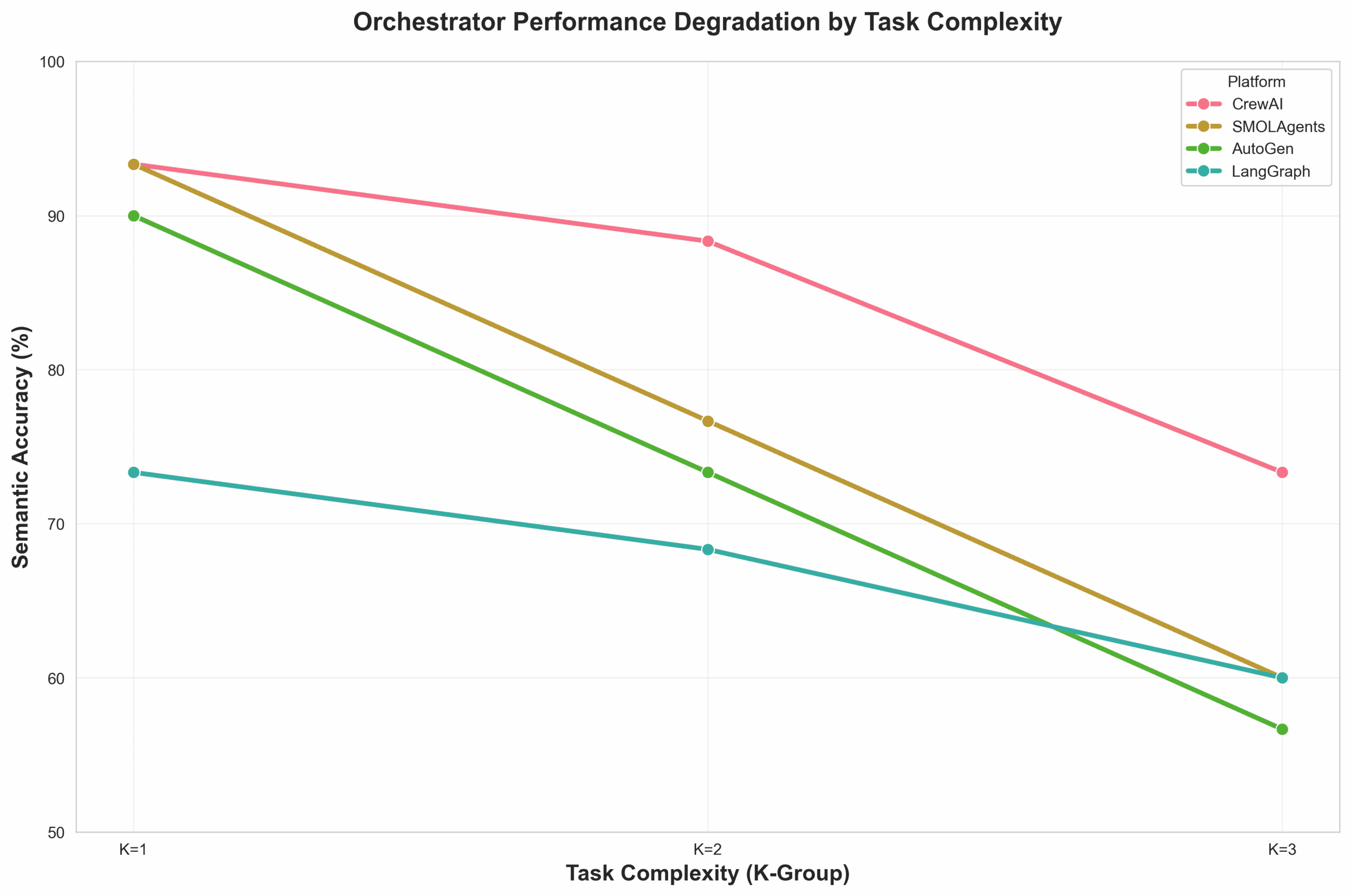

Below is a plot of semantic accuracy versus task complexity for each platform. As expected, accuracy declines as complexity increases across all systems—an important confirmation that complexity taxes orchestration regardless of vendor. The study surfaces clear separation between platforms under controlled conditions.
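Curves like the one plotted here can be computed by grouping scored results by complexity level and averaging correctness, with all runs pooled. The sketch assumes each scored result is a `(complexity, correct)` pair; the sample input is made up purely to exercise the function and is not benchmark data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_complexity(results: Iterable[Tuple[int, bool]]) -> Dict[int, float]:
    """Map each complexity level to the fraction of results at that level that were correct."""
    totals: Dict[int, int] = defaultdict(int)
    hits: Dict[int, int] = defaultdict(int)
    for complexity, correct in results:
        totals[complexity] += 1
        hits[complexity] += int(correct)
    return {level: hits[level] / totals[level] for level in sorted(totals)}


# Illustrative input only: (complexity, correct) pairs pooled across runs.
sample = [(1, True), (1, True), (2, True), (2, False), (3, False), (3, False)]
print(accuracy_by_complexity(sample))  # {1: 1.0, 2: 0.5, 3: 0.0}
```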

Accuracy alone, however, isn’t the full story. In our broader evaluation, we also measured cost per task, tools invoked per solution, and time to completion, among other metrics. Each provides a different view into efficiency and scalability.
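One way to capture these metrics is to log them for every task run alongside correctness and then average per platform. This sketch assumes each run records token counts, the number of tool calls, and wall-clock seconds; the `TaskRun` fields are hypothetical, and the per-token prices are caller-supplied placeholders rather than figures from the study or any provider's pricing.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskRun:
    input_tokens: int
    output_tokens: int
    tool_calls: int
    seconds: float
    correct: bool


def summarize(runs: List[TaskRun], usd_per_input_token: float, usd_per_output_token: float) -> Dict[str, float]:
    """Average accuracy, cost, tool calls, and latency per task across one platform's runs."""
    if not runs:
        raise ValueError("no runs to summarize")
    n = len(runs)
    total_cost = sum(
        r.input_tokens * usd_per_input_token + r.output_tokens * usd_per_output_token for r in runs
    )
    return {
        "accuracy": sum(r.correct for r in runs) / n,
        "cost_per_task_usd": total_cost / n,
        "tools_per_task": sum(r.tool_calls for r in runs) / n,
        "seconds_per_task": sum(r.seconds for r in runs) / n,
    }
```

Keeping these fields on the same record as correctness makes trade-offs visible directly, for example a platform that matches another's accuracy while invoking more tools per solution.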

For enterprise decision-makers, this has direct implications: an orchestrator’s ability to sustain accuracy under complexity determines whether AI agents can scale safely beyond proof-of-concept. In tightly coupled workflows, such as IT remediation, logistics optimization, or automated compliance, small reliability differences can cascade into large operational gaps.
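A simple model shows why small differences cascade. If each step in a workflow succeeds independently with probability p, a chain of n dependent steps succeeds with probability p^n. Independence is a simplifying assumption (real failures are often correlated, and retries or validation can recover some errors), and the per-step figures below are illustrative, not measured.

```python
def chain_success(per_step_accuracy: float, steps: int) -> float:
    """Probability that all `steps` dependent steps succeed, assuming independent failures."""
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


for p in (0.99, 0.95):
    print(p, round(chain_success(p, 20), 3))
# 0.99 0.818
# 0.95 0.358
```

Under this model, a four-point gap in per-step accuracy becomes a gap of roughly 46 points over a 20-step workflow.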

Choosing the Right Platform

We do not recommend selecting a platform solely on benchmark performance. Accuracy is critical, but so are use case fit, deployment model (open versus managed), integration needs, UI expectations, and data governance constraints.

The goal isn’t to crown a single winner, but to help teams align platform choice with problem shape and operational reality. As task complexity grows, multi-agent frameworks are also likely to yield performance improvements, though more testing is needed to identify the use cases where they help most.

At AHEAD, we help clients translate benchmarks like this into strategy: evaluating architectures, testing orchestration logic, and designing guardrails so agentic systems perform predictably in production.

Where This Is Headed

This benchmark is part of an ongoing research initiative. Next iterations will introduce overlapping tools, additional LLM families, and tighter operational constraints. Our objective is to raise the bar for rigorous, transparent evaluation so enterprises can deploy agentic systems with confidence, not speculation.

If you’re exploring agentic AI architectures, we’d love to compare notes. Read the full whitepaper for the complete methodology, or get in touch with AHEAD to learn more.

About the author

Kurt Boden

Senior Technical Consultant

Kurt brings 10+ years of experience delivering AI solutions across industries including insurance, technology startups, and social media platforms. From predictive modeling for natural disasters to real-time NLP for document understanding, Kurt has architected and led AI initiatives from concept to production. He excels at translating business needs into practical AI solutions, combining strong client-focused communication with rigorous evaluation of real-world impact. Kurt’s background spans AI architecture, technical leadership, and entrepreneurship, with hands-on expertise in building models, data infrastructure, and end-to-end AI systems.
