SCALEIO TECH OVERVIEW AND CONCEPTS – SDS-SAN VS SDS-ARRAY

Software Defined Storage (SDS) Evolution

No matter what avenue you choose to access your storage, there is software in some shape or form providing you access to your information. From traditional storage frames to large enterprise NAS arrays, we’re seeing software define how we deliver our data. We’ve seen thin provisioning, over-provisioning, tiered storage, single-filesystem NAS (OneFS on Isilon, as an example), large scalable object stores and much more. Even so, the software running on these platforms was still tied to the hardware somehow, and the platform was still rigid and in the control of the manufacturer, not the consumer.

The key difference between these legacy platforms and the new SDS products is that storage access and data services have been abstracted almost completely into the software and hypervisor layers. That has driven a shift to commodity-based hardware for storage and has given the customer control over when to grow, how to deploy and how to spend, on their own timeline. Some technologies, such as VSAN, require that all nodes within a VSAN cluster take part in the storage abstraction process if they want to access storage in an SDS format, which takes away some of the previously mentioned benefits. It is still a top-of-the-line product and, like anything else, has its appropriate place in the enterprise. ScaleIO differs on this point, and that is one of its strongest competitive advantages in the SDS marketplace: it maintains flexibility while providing abstracted storage services with enterprise-grade resiliency and protection.

ScaleIO and SDS technologies generally fall in the AHEAD Cloud Delivery Framework (CDF) as part of our Hyperconverged Discussion set within our infrastructure group. We have seen the rise of HCI, including both appliance and build-your-own solutions. As a critical stakeholder in this space, AHEAD has been working along with Dell|EMC to validate, understand, and determine the fit for ScaleIO as part of our CDF approach.

ScaleIO Architecture

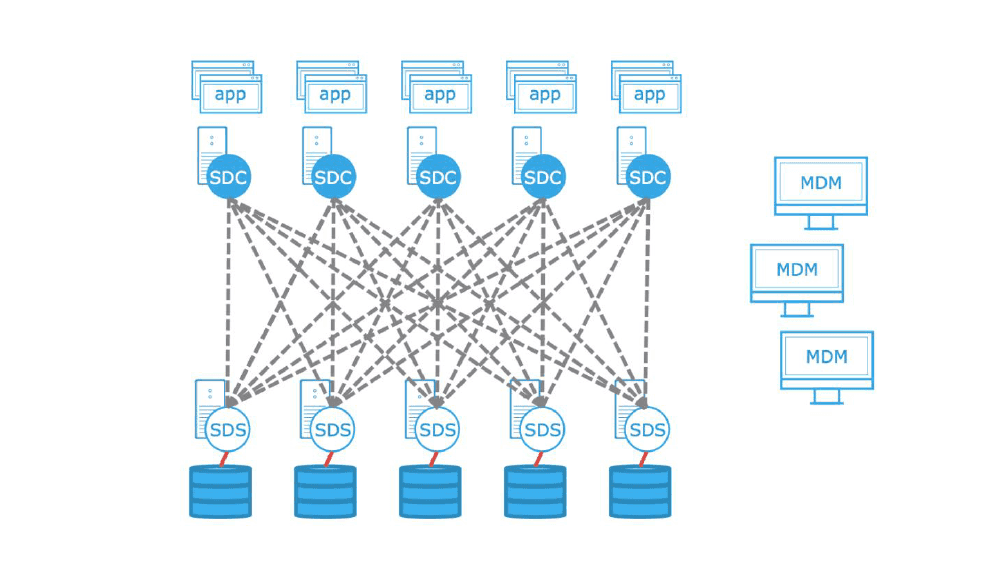

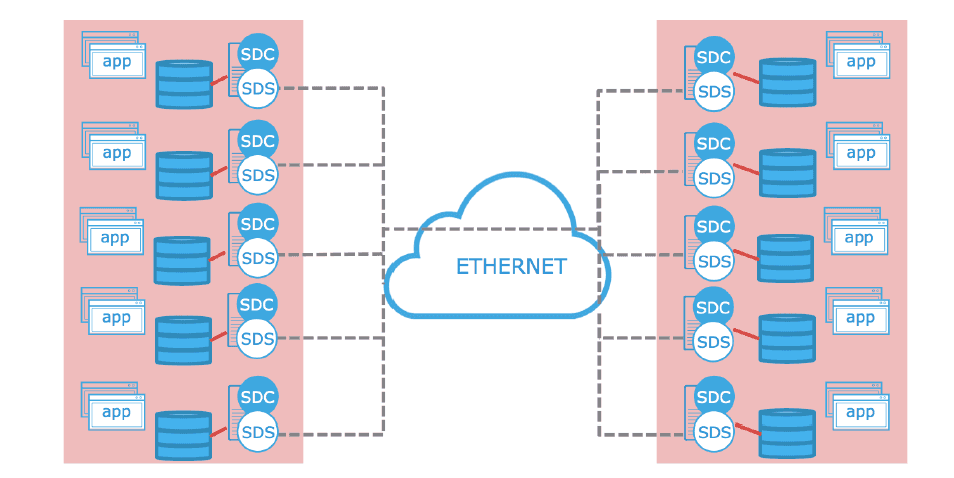

ScaleIO is broken down into three main components: the ScaleIO Data Client (SDC), the ScaleIO Data Server (SDS) and the Meta Data Manager (MDM). The SDC acts as the block driver that lets a client (server) access its block storage within the ScaleIO ecosystem. It gives the operating system or hypervisor it runs on access to logical block devices called “volumes”, which can be thought of as LUNs in a traditional SAN environment. Through the driver, each SDC knows which SDSs hold its block data, so multipathing is handled natively by the SDC itself.

The SDS is where most of the magic happens in ScaleIO: it allows the underlying storage hardware to participate in the ScaleIO cluster. As the server side, the SDSs together provide all of the storage that the SDCs participating in the cluster can access. The SDS is responsible for activities such as creating and modifying storage pools, configuring protection domains and laying out fault sets. All the SDSs in the cluster communicate in a full mesh to optimize rebuild, rebalance and parallel I/O access.

Lastly, the MDM handles the SDC-to-SDS data mapping (established when a volume is created and updated as necessary if failures occur), maintains quorum in the ScaleIO cluster and orchestrates all rebuild processes if a failure occurs in the environment.
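The division of labor between the three components can be sketched in a few lines of Python. This is a toy model, not ScaleIO code: the class names mirror the component names, but the fixed chunk size, the round-robin placement and every method shown are assumptions made purely for illustration. The point it tries to show is that the MDM only hands out the map, while the SDC sends I/O straight to whichever SDS owns the chunk.

```python
from dataclasses import dataclass, field

CHUNK_MB = 1  # hypothetical chunk size, chosen only for illustration


@dataclass
class SDS:
    """Storage node: holds chunk data on its local devices."""
    name: str
    chunks: dict = field(default_factory=dict)  # (volume, chunk index) -> bytes

    def write(self, volume, index, data):
        self.chunks[(volume, index)] = data

    def read(self, volume, index):
        return self.chunks[(volume, index)]


class MDM:
    """Metadata manager: decides and records which SDS owns each chunk."""

    def __init__(self, sds_nodes):
        self.sds_nodes = list(sds_nodes)
        self.volume_maps = {}  # volume -> list of SDS names, one per chunk

    def create_volume(self, volume, size_mb):
        n_chunks = size_mb // CHUNK_MB
        # Round-robin placement is an assumption, not ScaleIO's real algorithm.
        layout = [self.sds_nodes[i % len(self.sds_nodes)].name
                  for i in range(n_chunks)]
        self.volume_maps[volume] = layout
        return layout


class SDC:
    """Client driver: fetches the map once, then talks to each SDS directly."""

    def __init__(self, mdm, volume):
        self.volume = volume
        self.layout = mdm.volume_maps[volume]
        self.by_name = {s.name: s for s in mdm.sds_nodes}

    def write(self, index, data):
        self.by_name[self.layout[index]].write(self.volume, index, data)

    def read(self, index):
        return self.by_name[self.layout[index]].read(self.volume, index)


def demo():
    """Create a 6-chunk volume on three SDS nodes and do one round trip."""
    nodes = [SDS("sds-a"), SDS("sds-b"), SDS("sds-c")]
    mdm = MDM(nodes)
    mdm.create_volume("vol1", size_mb=6)
    sdc = SDC(mdm, "vol1")
    sdc.write(4, b"hello")
    return sdc.layout[4], sdc.read(4)


if __name__ == "__main__":
    print(demo())
```

In this sketch the MDM is never on the data path after the map is fetched, which is the property that lets the SDC spread I/O across many SDSs in parallel.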

With the popularity and falling cost of 10Gb Ethernet, which ScaleIO can run on, we can provide a high-speed storage network on the same infrastructure that is already being purchased for traditional network needs. As far as I’m concerned, this is a much more elegant way to consolidate the network needs of storage and servers than what FCoE promised us in the data center. Couple that with some of the unique properties of ScaleIO and you have one valuable solution.

ScaleIO is unique but needs to be accepted for its uniqueness

So far, ScaleIO sounds pretty straightforward, so where is the value and importance of the product? For the past ~20 years, we’ve been deploying storage services in the same way. A host connects to a SAN switch, the switch connects to storage, storage delivers the block capacity requested, and the process repeats for each new request. Need more storage? Capacity can be added to the array until capacity or performance is maxed out, and then additional arrays have to be added. Need more compute? We can buy more servers and load the application as necessary. Need more connections? Buy more storage-specific switching infrastructure. Where ScaleIO represents a paradigm shift is that it allows us to place our storage within any existing component (if there’s room in the server) and dynamically add capacity to our storage pool without buying more array-based hardware, using disk capacity (HDDs, SSDs, etc.) that is presented to either the host OS or the hypervisor. This gives us an opportunity to free ourselves, to some degree, from our SAN-based switching, shrinking our Fibre Channel needs to only the hardware that requires a storage array for some purpose (deduplication, Layer 2 FC extension, etc.). Finally, it gives us the option, if we so choose, to grow our environment in much the same way as a hyperconverged platform without being tied to continually building it out that way. We can build nodes with minimal amounts of RAM and a large amount of storage as “storage nodes”. We can build the opposite way, with a large amount of compute and some storage, so that we can run our applications locally. In short, ScaleIO is flexible.

ScaleIO also has the ability to scale, hence the name. Massively. With limits currently set at over 1,000 nodes, we can expand our new SDS capabilities as far as our capacity, risk, protection or fault domain requirements mandate while still being agile and flexible in our deployment. Adding more nodes adds both capacity and performance to the cluster, reaching speeds as high as, if not higher than, most enterprise storage arrays on the market. Relatively recent performance numbers from independent reviewers such as storagereview.com show that the product is viable from a performance standpoint, but the market must still accept the shift to such a different platform.

ScaleIO is not without its limits. Currently, in order to replicate, Dell|EMC RecoverPoint, vSphere Replication, Zerto or other replication software is needed to get your data to another secure location. There’s no deduplication in the system, even when utilizing flash devices, so the capacity you buy is always 1:1. ScaleIO’s biggest gap is one it shares with most other SDS technologies: the data services tend to be reduced, requiring additional software products to get the job done. That said, delivering data services flexibly via software gives customers more choice and capabilities without tying them to a specific manufacturer, and lets them license only the software they need to consume. Flexibility in how we architect our solutions, especially in the cloud era of computing, is important when controlling cost, growth and strategy.

SDS-Arrays or SDS-SAN?

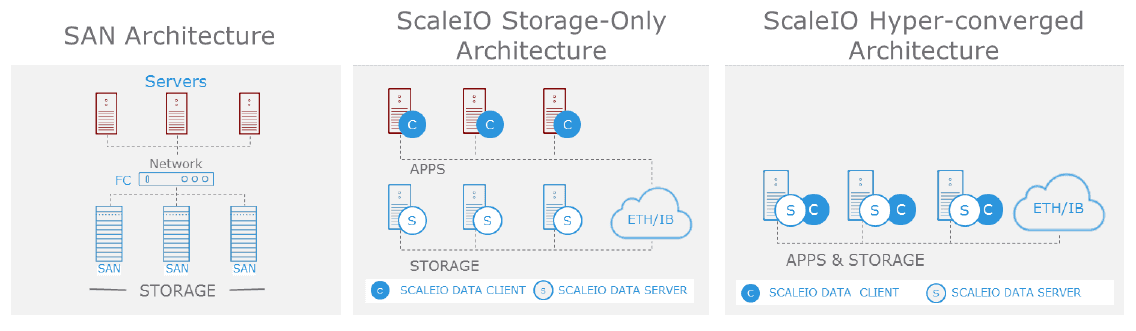

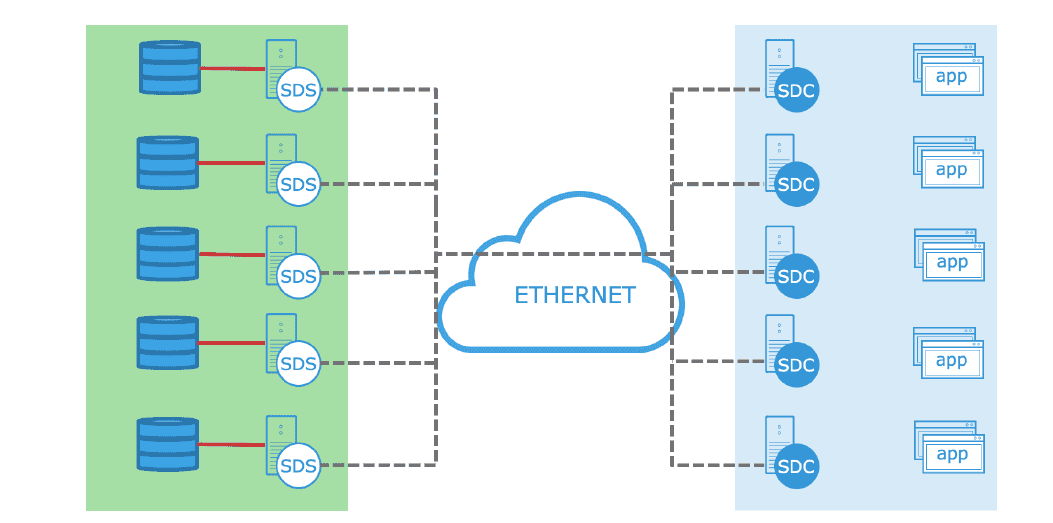

When deploying ScaleIO, there are two methods of implementing the product: as a two-tier deployment or as a hyperconverged-style implementation. These two methods are new to the industry, but the ideas and theory behind each are not. In my mind, the two-tier approach is what I would call an SDS-Array, where we’re reaching for our block services on a dedicated grouping of hardware, very similar to what most environments are already used to in terms of storage capabilities. As an industry, we’re comfortable with the idea of a large, interconnected piece of metal providing our storage. A logical collection of servers providing the same service is relatively new, but both accomplish the same task. The hyperconverged approach is exactly that: the SDS and the SDC reside on the same piece of hardware, which adds capacity to the storage pool and consumes capacity from that same pool. In this deployment, our applications can reside on the same hardware as the capacity being provided, potentially assisting with data locality if that’s a concern.

Where ScaleIO differs most from traditional SDS solutions is that we can combine these two deployment styles in the same environment, essentially producing something that has been coined an SDS-SAN. Some nodes may only provide storage, some may only consume storage and others may do both. The possibilities are astounding while still maintaining the flexibility and freedom that ScaleIO promotes.
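To make the three kinds of node concrete, here is a small sketch that classifies nodes by which ScaleIO components they run. The `Node` type, the node names and the capacity figures are all hypothetical; only the roles themselves (an SDS provides storage, an SDC consumes it) come from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    runs_sds: bool       # contributes local disks to the pool
    runs_sdc: bool       # consumes volumes from the pool
    raw_tb: float = 0.0  # local raw capacity offered (made-up figures)


def role(node):
    """Name the deployment role implied by the components a node runs."""
    if node.runs_sds and node.runs_sdc:
        return "hyperconverged"
    if node.runs_sds:
        return "storage-only"
    if node.runs_sdc:
        return "compute-only"
    return "not in cluster"


def summarize(nodes):
    """Count nodes per role and total the raw capacity offered by SDS nodes."""
    counts = {}
    for n in nodes:
        counts[role(n)] = counts.get(role(n), 0) + 1
    pool_tb = sum(n.raw_tb for n in nodes if n.runs_sds)
    return counts, pool_tb


if __name__ == "__main__":
    cluster = [
        Node("stor-01", runs_sds=True, runs_sdc=False, raw_tb=40.0),
        Node("stor-02", runs_sds=True, runs_sdc=False, raw_tb=40.0),
        Node("app-01", runs_sds=False, runs_sdc=True),
        Node("hci-01", runs_sds=True, runs_sdc=True, raw_tb=10.0),
    ]
    print(summarize(cluster))
```

A cluster made up only of storage-only and compute-only nodes corresponds to the two-tier SDS-Array, one made up only of hyperconverged nodes to the hyperconverged style, and any mix of the three to the SDS-SAN. The capacity total here is raw capacity, before any protection overhead.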

Dell|EMC also has three ways of deploying the ScaleIO system: as a software-only solution, as ScaleIO Ready Nodes or as a fully built hyperconverged product, Dell’s VxRack System Flex 1000. Flexibility in the product is matched by flexibility in how we can deliver it. Give Yon Ubago’s AHEAD blog post a read; it covers some of the history of Dell|EMC’s converged story and how it ties into hyperconverged in the future.

As a former storage manager in the financial industry, being able to adjust my purchasing timelines and flatten my run rate from a CapEx standpoint with node-based storage is something I greatly desired. Dell|EMC’s Isilon was the first platform that truly allowed me to buy each node as an individual asset, reducing the need to always purchase in large refreshes or to buy capacity that wasn’t needed at that moment. ScaleIO has now done the same thing, and then some, for the needs of block storage within the enterprise. We can now purchase on whatever schedule is deemed fit from a forecasting standpoint and buy only what we need, in whatever format we desire. Add to that the fact that we don’t need to license complete arrays with replication software and the like, and we now have a financially flexible, performant storage platform for the future of the enterprise.

While ScaleIO hasn’t been widely adopted in the enterprise just yet, I think that this is one of Dell|EMC’s most strategic storage products. Enabling our customers to maintain flexibility and enterprise resiliency is empowering and is leaps ahead of the SDS competition. It keeps Dell|EMC relevant in the enterprise storage space and pushes the industry forward with SDS. Tie this with the server specs that Dell has in place and the capability to deliver commodity-grade hardware in either an independent or converged fashion, and you have one heck of a transformational story.

Want to dive deeper into the Hyperconverged discussion and how AHEAD can help your organization? Contact us to learn more about the AHEAD Cloud Delivery Framework or to schedule a briefing!